Research Interests

“The ultimate display would be a room within which the computer can control the existence of matter” – Ivan E. Sutherland (1965)

Vision: Programmable Matter

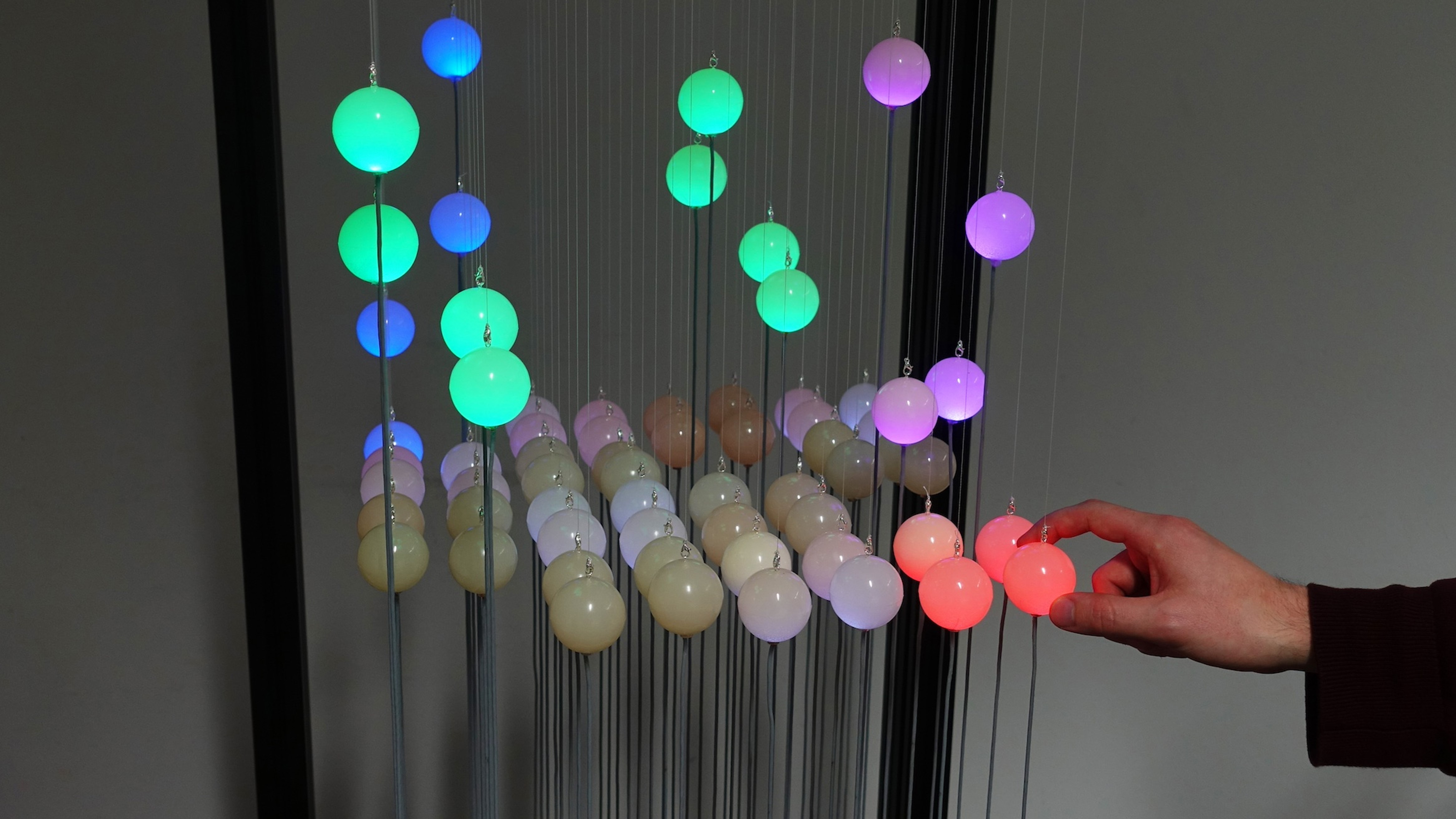

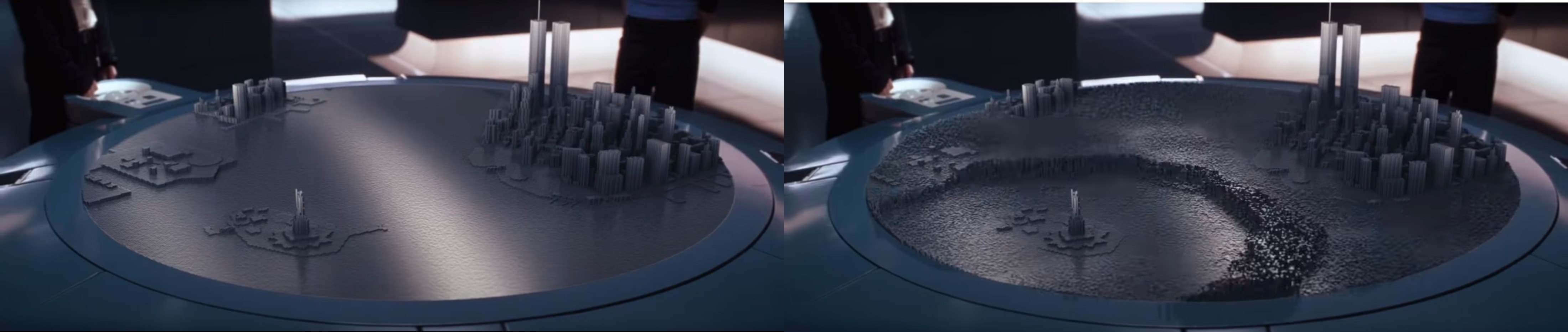

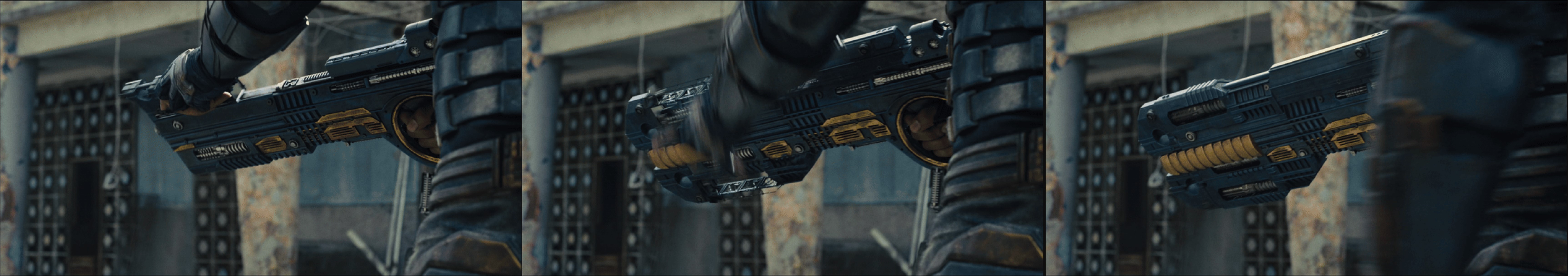

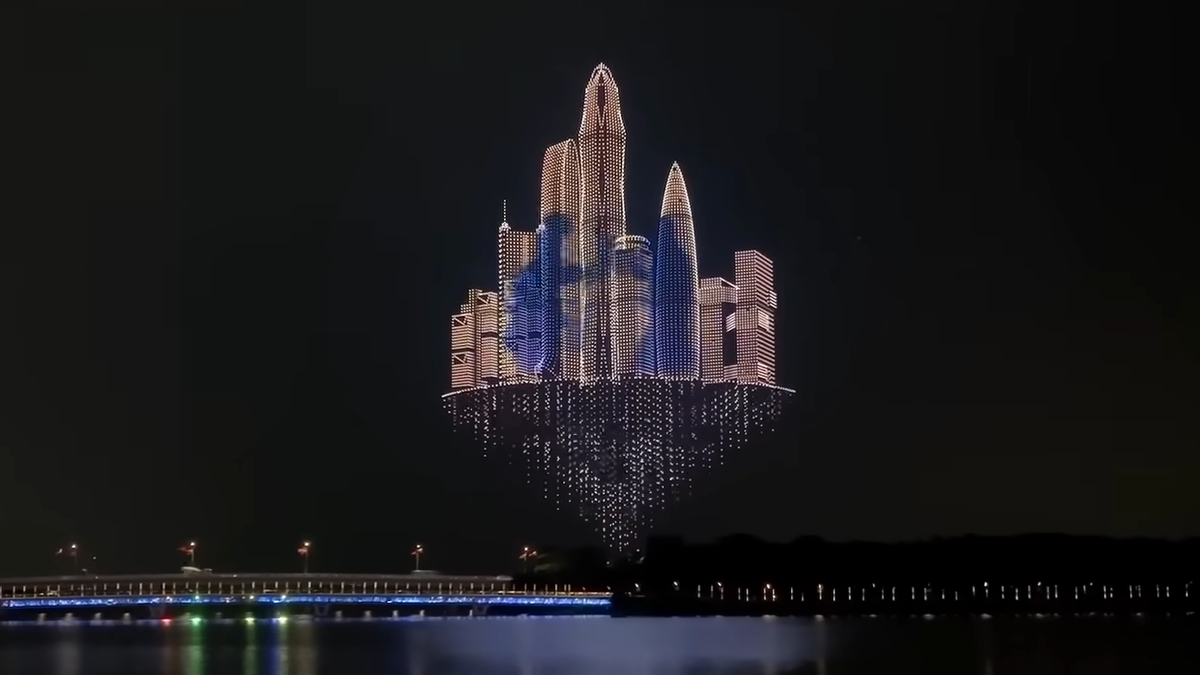

The cinema and entertainment industries often present speculative visions of future technologies, serving as a bridge between scientific discourse and the collective imagination. For instance, many films and television series explore the concept of transformative materials and environments capable of dynamic reconfiguration, as illustrated in Figure 1.

In research, this speculative idea corresponds to what is known as Programmable Matter: a technological paradigm at the convergence of computation and physical materiality, in which algorithms are embedded within matter itself, enabling it to sense, compute, and reconfigure its own structure or properties. The conceptual roots of this vision can be traced back to 1965, when Sutherland envisioned a computer capable of “controlling the existence of matter” [31].

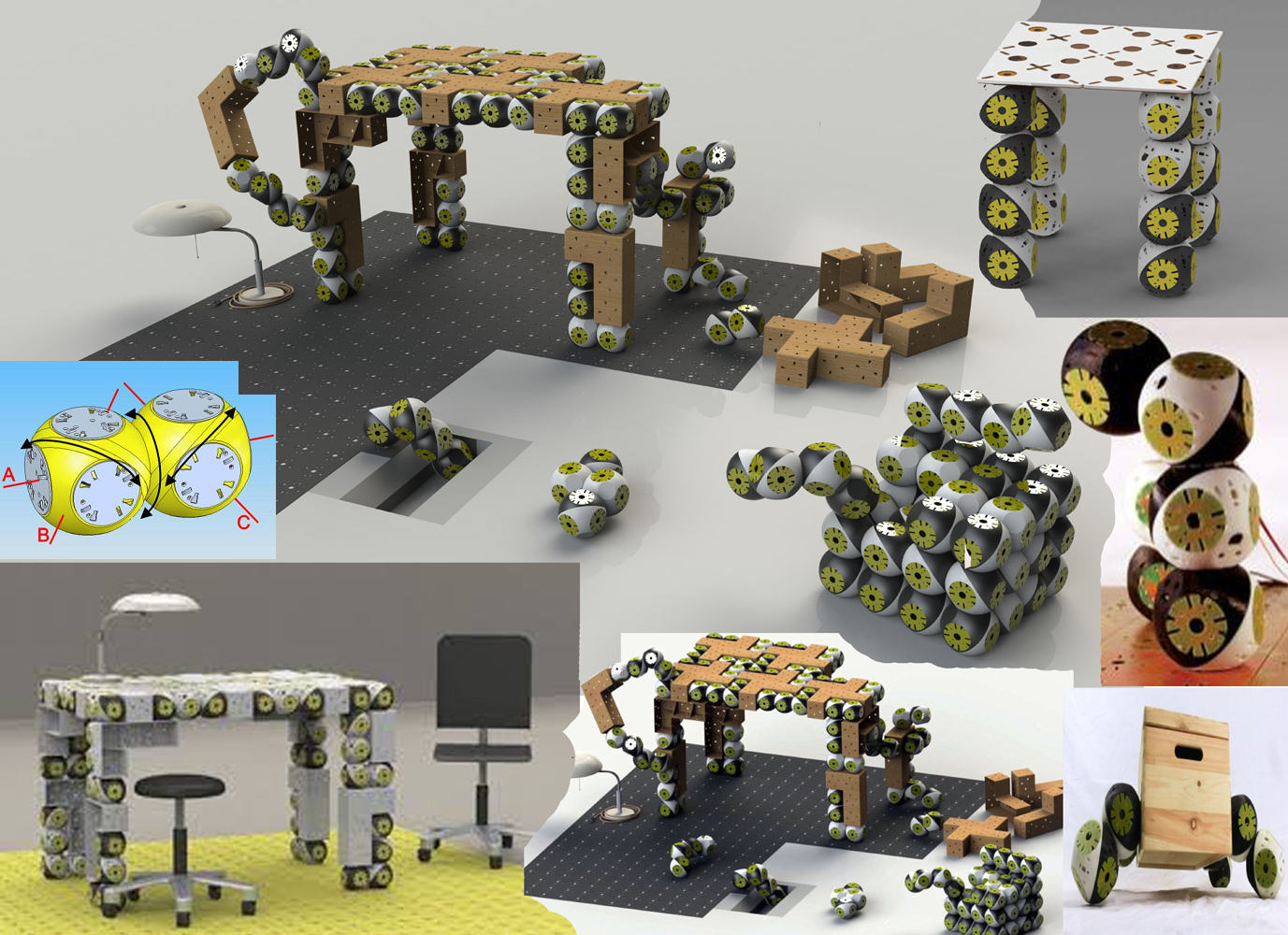

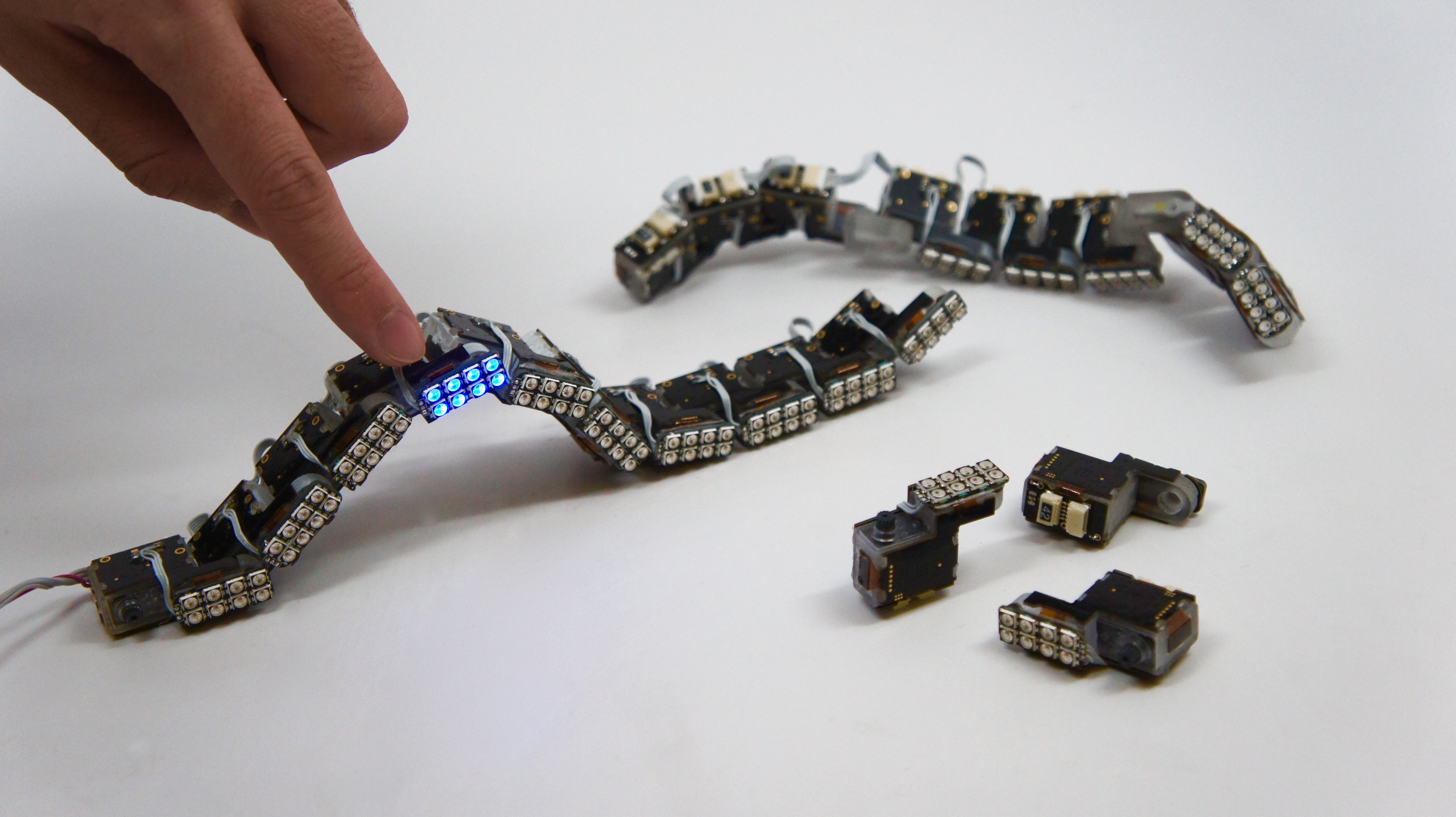

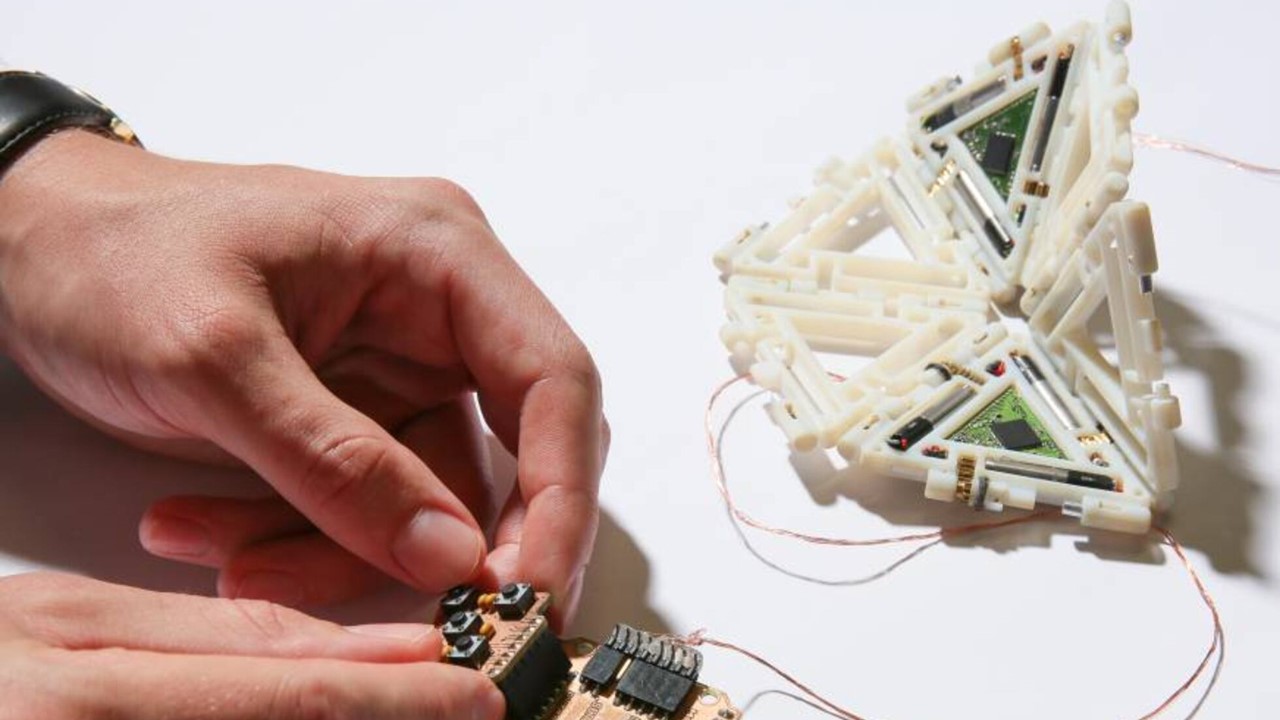

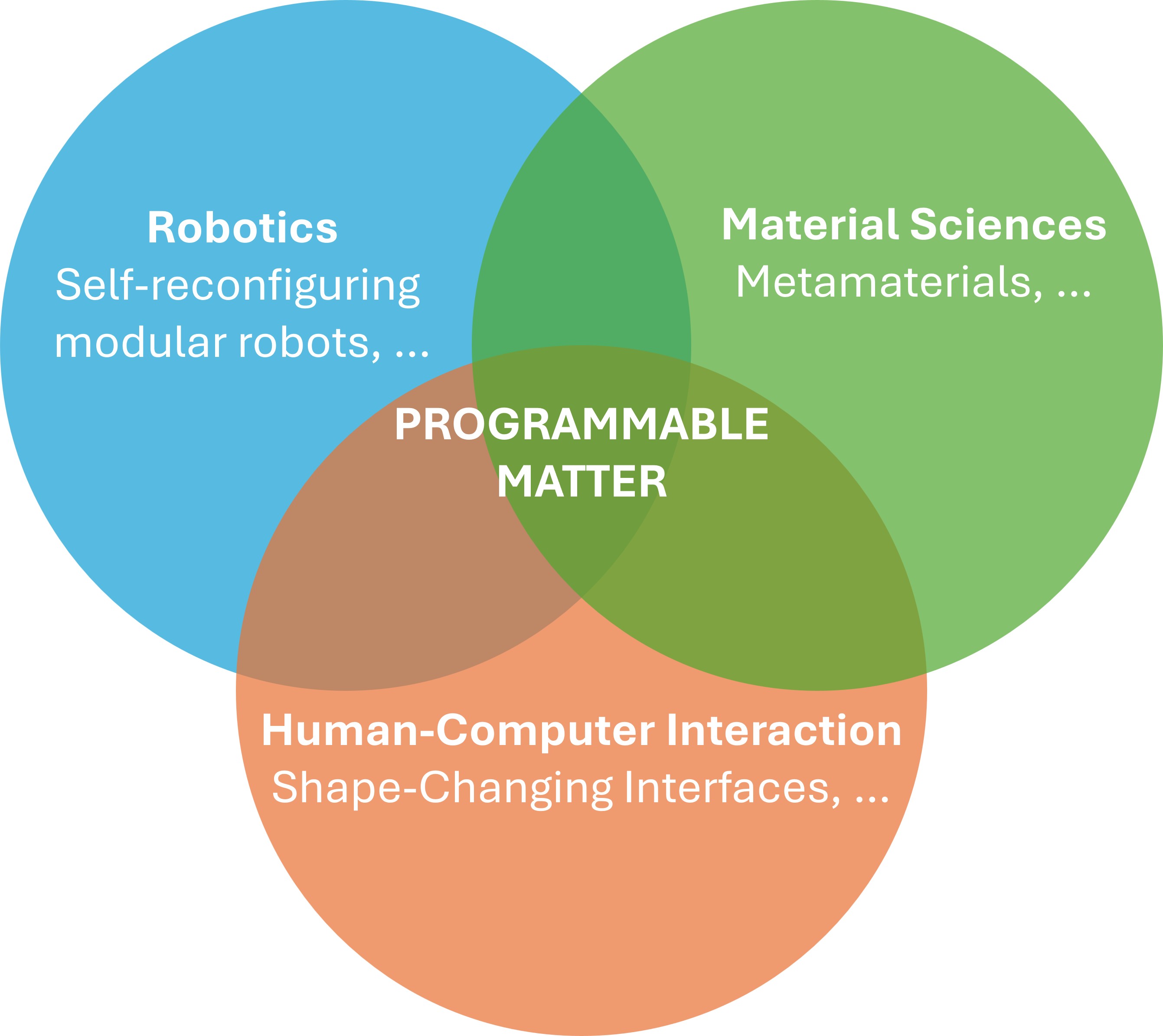

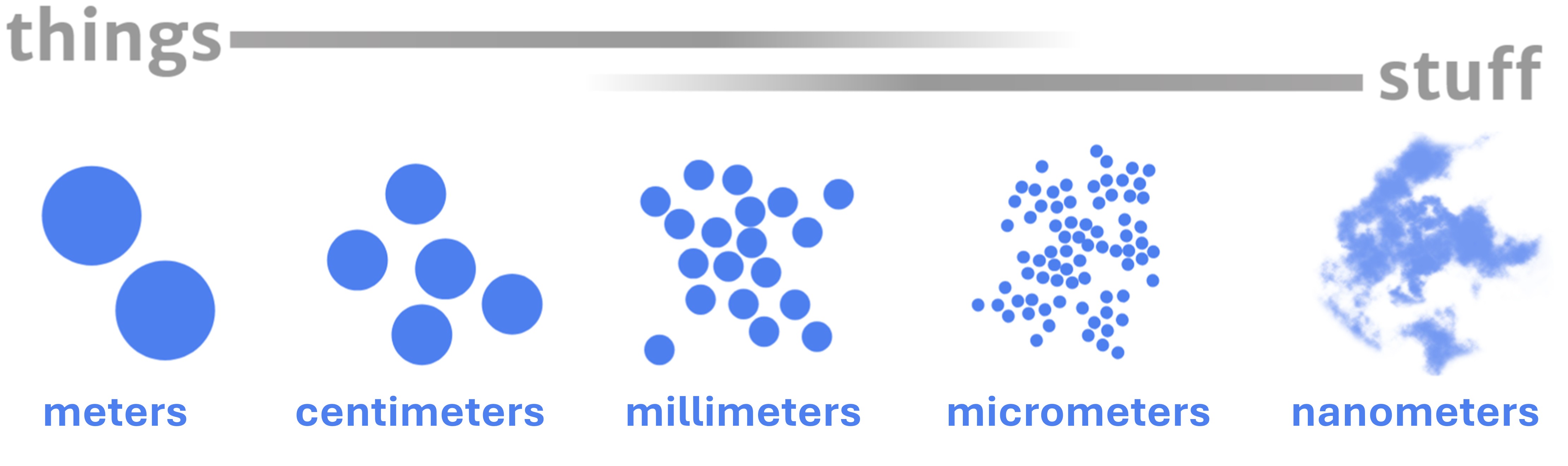

Today, this vision unites a range of research domains as shown in Figure 2 (a), including self-reconfiguring modular robotics within Robotics (e.g., [36]), smart materials and metamaterials in Materials Science (e.g., [21]), and shape-changing interfaces within Human–Computer Interaction (e.g., [24]). Ultimately, these fields converge towards moving from discrete objects (“things”) to continuous, reconfigurable materials (“stuff”) as illustrated in Figure 3.

Moving from discrete objects (“things”) to continuous, reconfigurable materials (“stuff”)

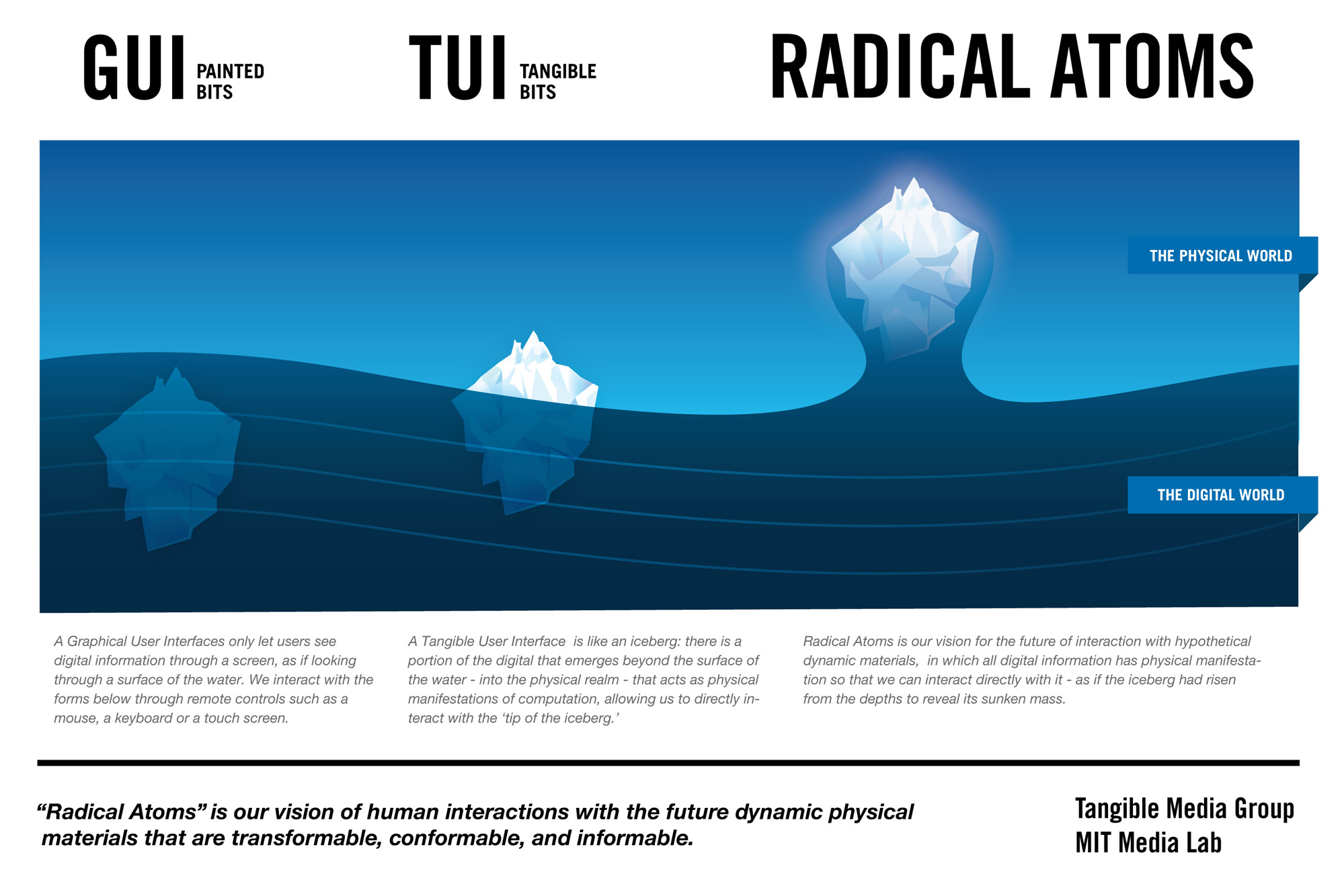

Research Field: Shape-Changing Interfaces

Graphical User Interfaces (GUIs) are now ubiquitous, offering flexibility and interactivity through graphical elements within a limited two-dimensional (2D) space (e.g., phones, tablets, laptops). While GUIs provide a high degree of malleability, allowing users to manipulate digital content with relative ease, they are constrained by the abstraction of the 2D screen. In contrast, Tangible User Interfaces (TUIs) [13, 14] use physical objects as interactive elements, enabling more natural and embodied interactions. TUIs support collaborative work, facilitate embodied thinking, sustain external memory, and allow parallel actions through spatially distributed interactions [27]. By grounding interactions in the physical 3D world, TUIs draw on human cognitive, motor, and collaborative abilities that have evolved over millennia through hands-on experience with real-world objects.

Despite these advantages, TUIs are inherently limited in terms of malleability. Unlike GUIs, the physical properties of tangible objects – such as position, orientation, shape, color, and transparency – cannot be easily or dynamically modified through software, which restricts their adaptability and versatility in supporting a wide range of interaction scenarios. Without malleability, TUIs will remain perpetually outpaced by GUIs. A key design challenge is therefore to develop interfaces that combine the flexibility of GUIs with the embodied richness of TUIs.

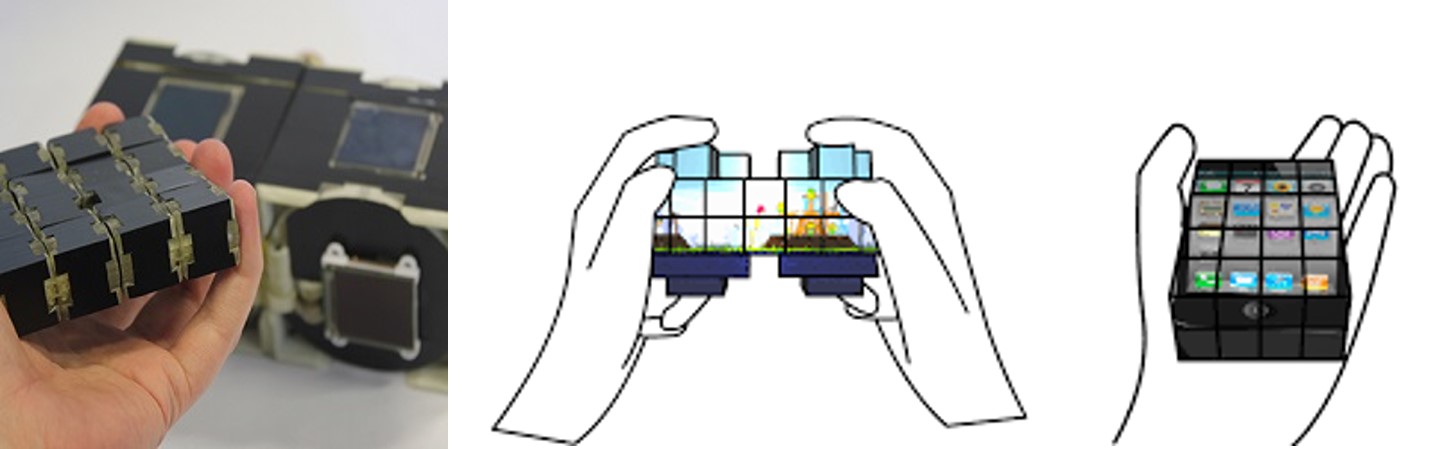

Aligned with Programmable Matter, shape-changing interfaces (SCIs) aim to blur the boundary between physical and virtual objects, combining the physicality of Tangible User Interfaces (TUIs) with the malleability of Graphical User Interfaces (GUIs) [12, 27, 30] (see Figure 4). They are interactive devices that perform physical transformations in response to user inputs or system events, enabling them to convey information, meaning, or affect [1].

Shape-Changing Interfaces in the landscape of human-computer interaction

Purposes and Benefits

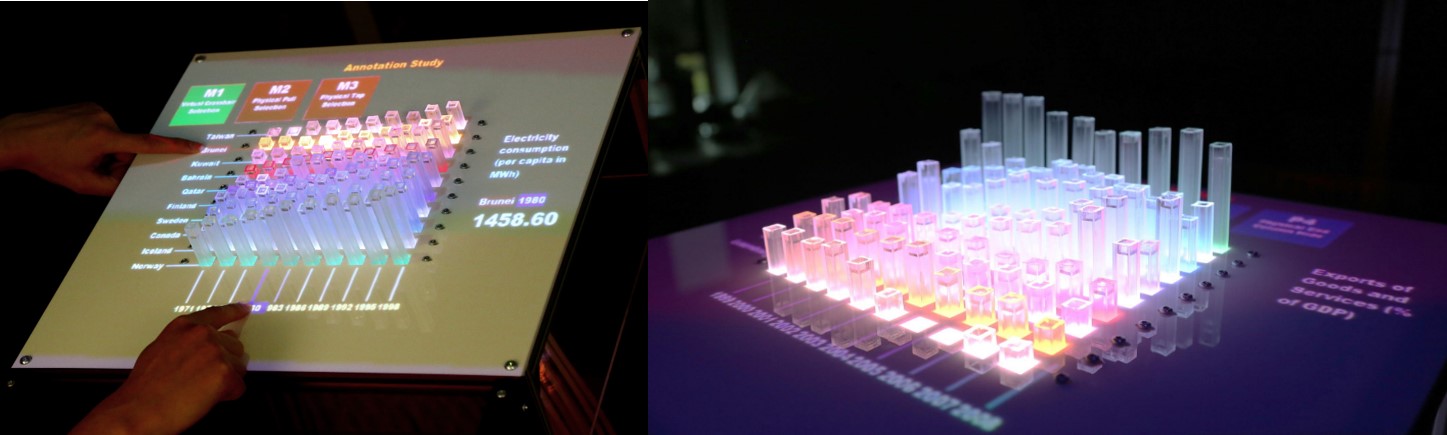

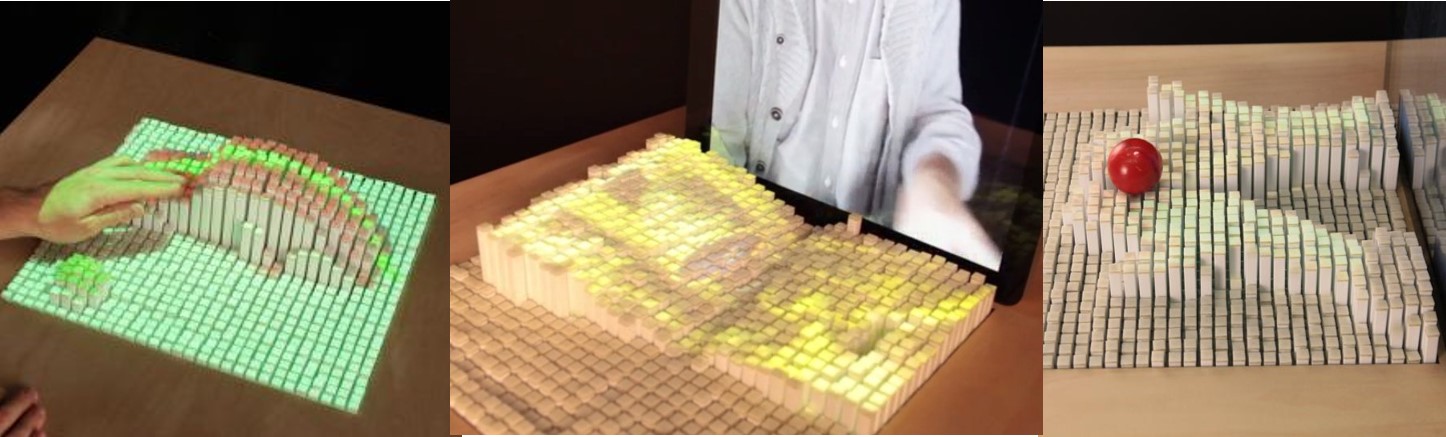

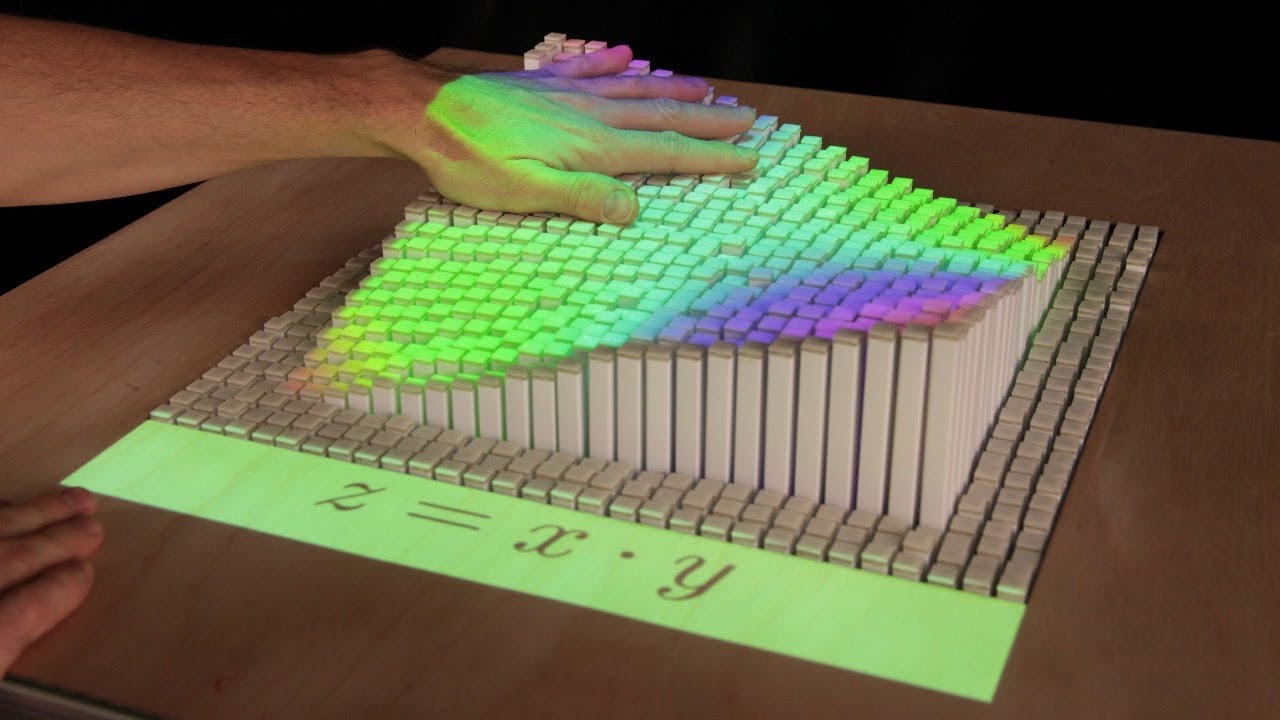

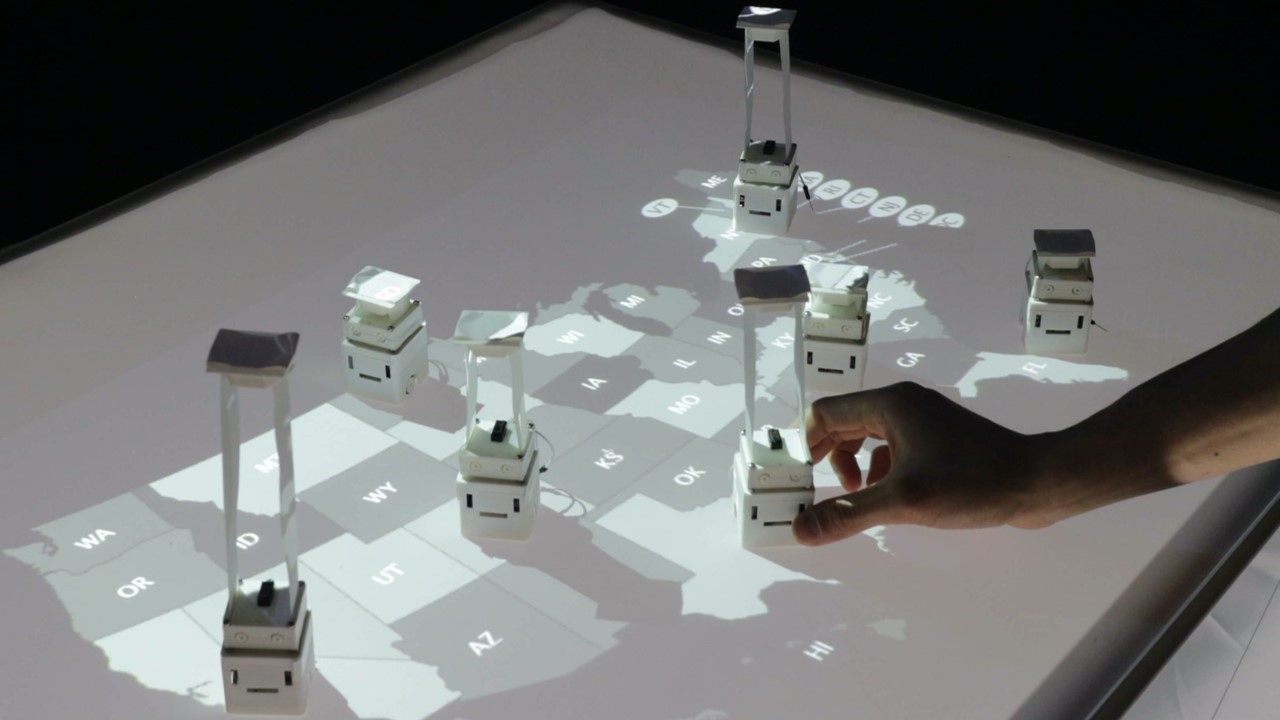

To help researchers and target user-groups see clear benefits in the development of shape-changing interfaces, [1] explored the purposes and benefits from the literature. Five fundamental purposes for SCIs were identified, each illustrated in Figure 5:

- Communicate Information – conveying information through combinations of visual, haptic, and shape animation (see Figure 5 (a)).

- Simulate Objects – physically simulating real or imaginary objects (see Figure 5 (b)).

- Adapt Interfaces – adapting shape to specific interaction contexts (see Figure 5 (c)).

- Augment Users – augmenting elements of or within the user’s body (see Figure 5 (d)).

- Support Arts – supporting aesthetic, sensorial, or entertainment goals (see Figure 5 (e)).

Interaction Types

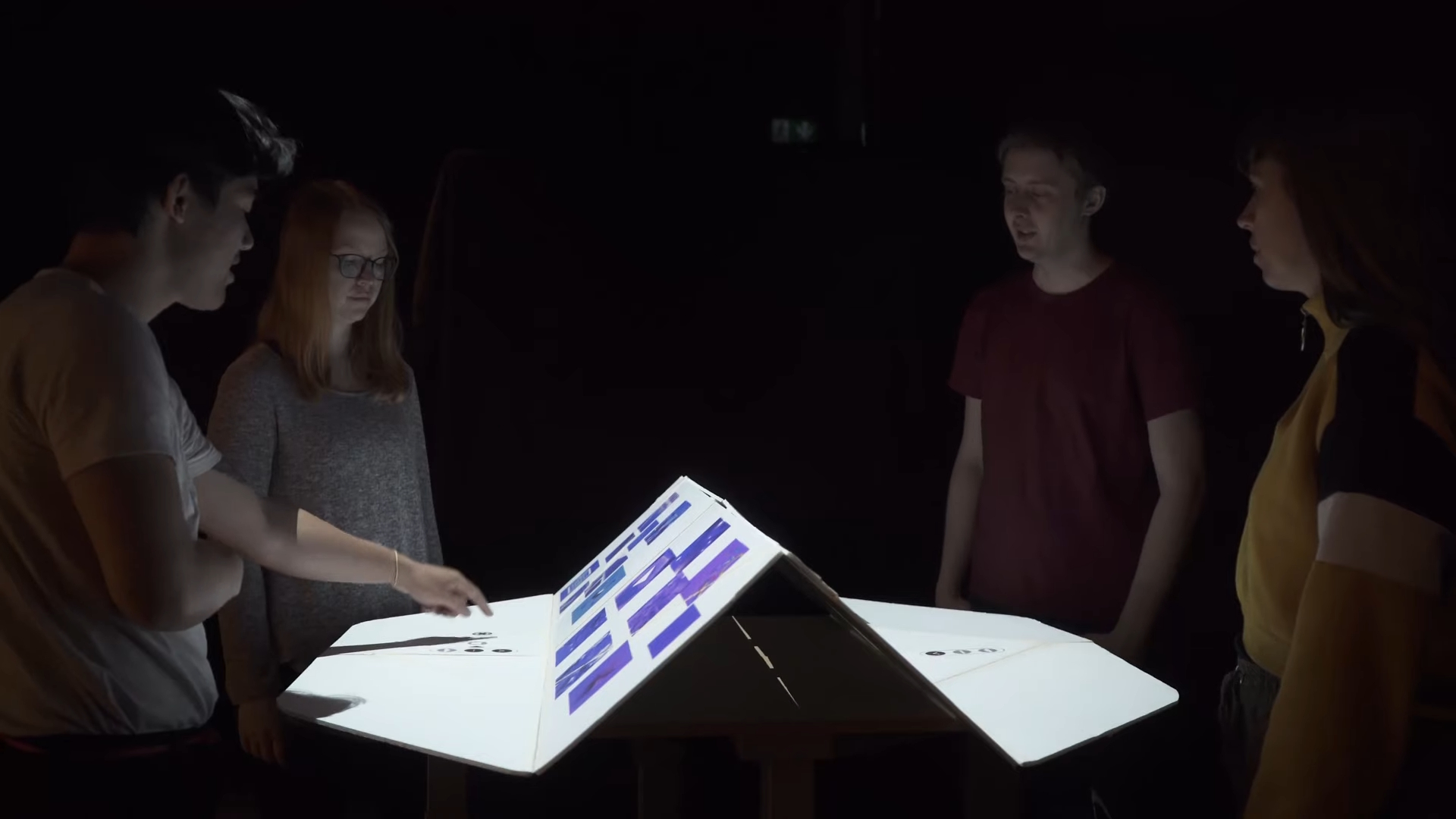

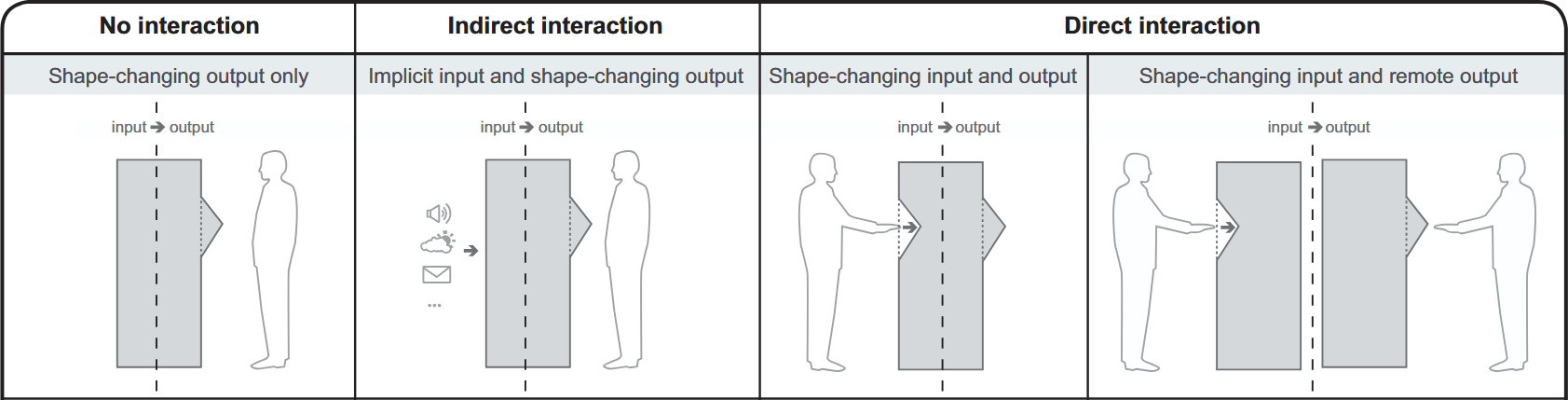

Rasmussen et al. [25] identify three primary interaction types for shape-changing interfaces (see Figure 6 (a)):

- No interaction – shape change is used solely as system output (see Figure 6 (b) and Figure 6 (c)).

- Indirect interaction – shape change occurs in response to implicit input (see Figure 6 (d) and Figure 6 (e)).

- Direct interaction – shape change functions as both input and output. Within direct interaction, they distinguish two subtypes:

  - Action and reaction – users physically manipulate the interface’s shape, and the system responds with its own physical transformation (see Figure 6 (f) and Figure 6 (g)).

  - Input and output – both the user and the system simultaneously manipulate the interface’s shape (see Figure 6 (h) and Figure 6 (i)).

Shape-Change Properties

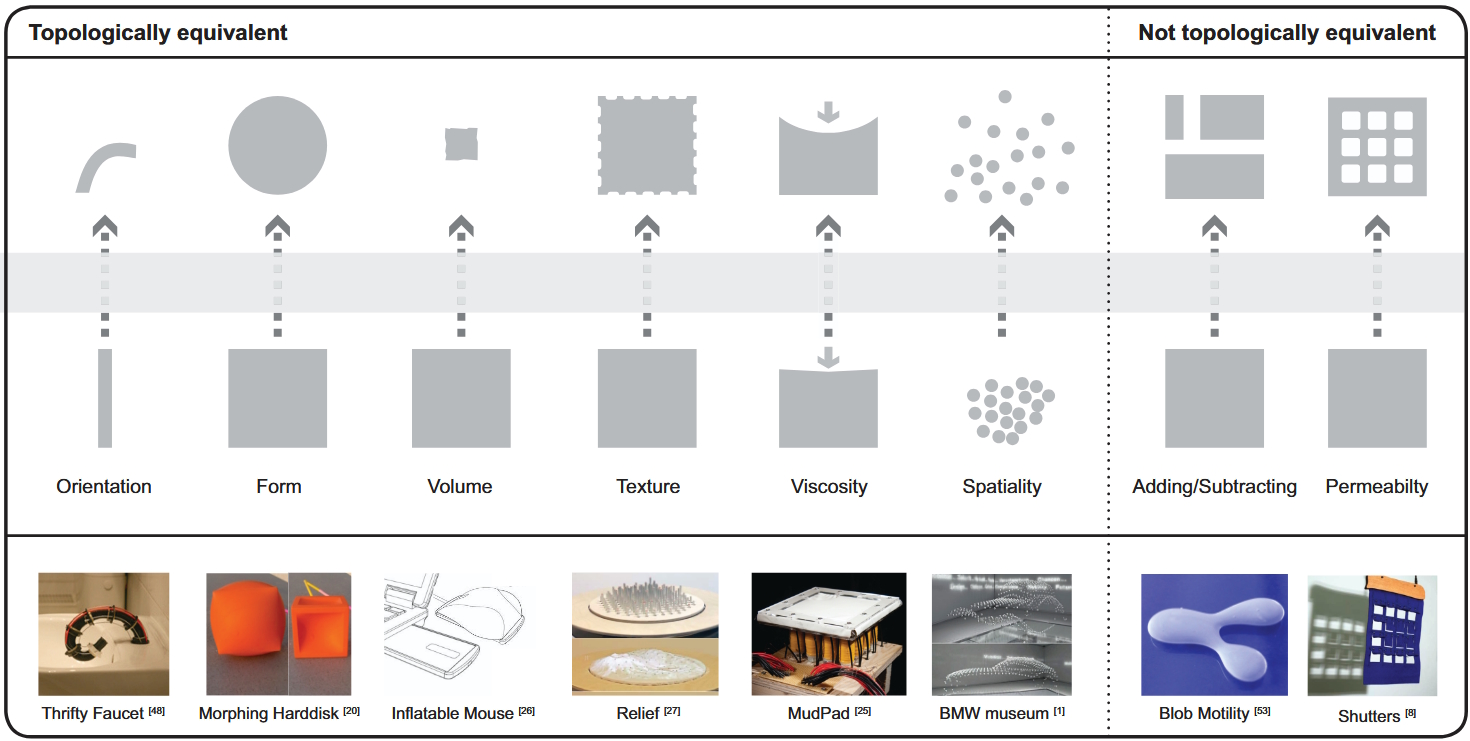

Rasmussen et al. [25] identify eight primary types of shape transformations: Orientation, Form, Volume, Texture, Viscosity, Spatiality, Adding/Subtracting, and Permeability. See Figure 7 for illustrations of these properties.

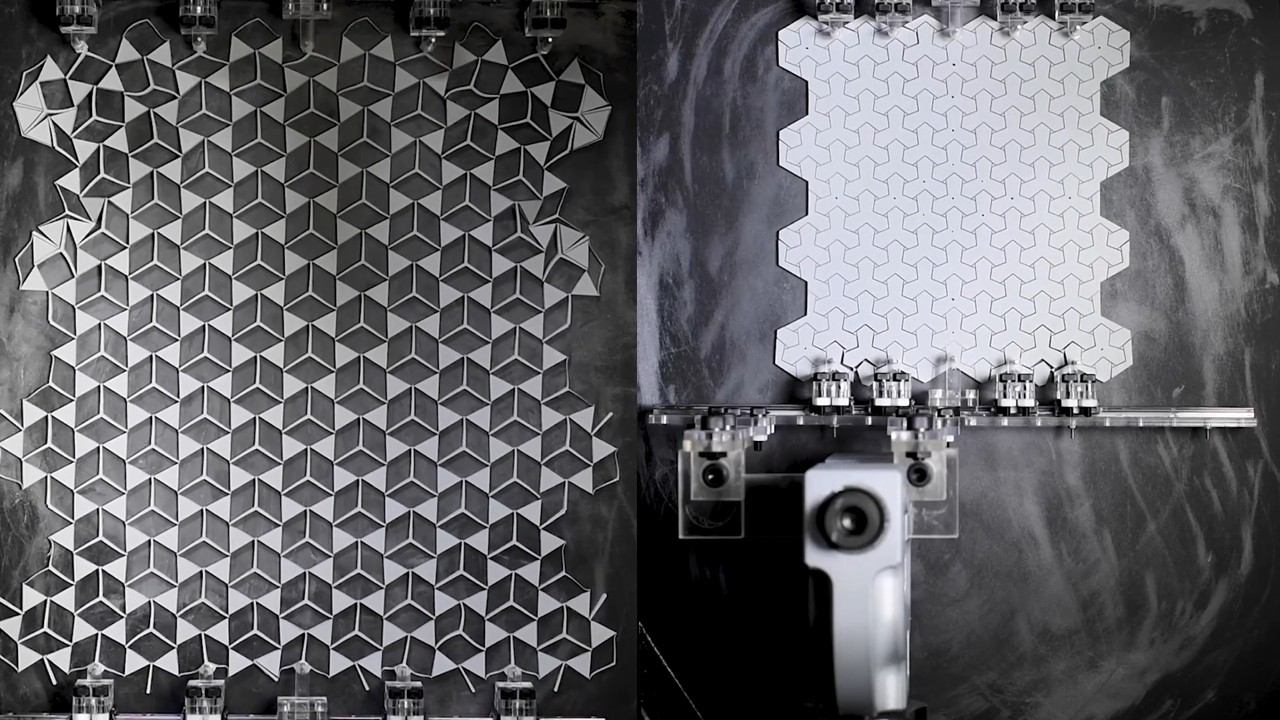

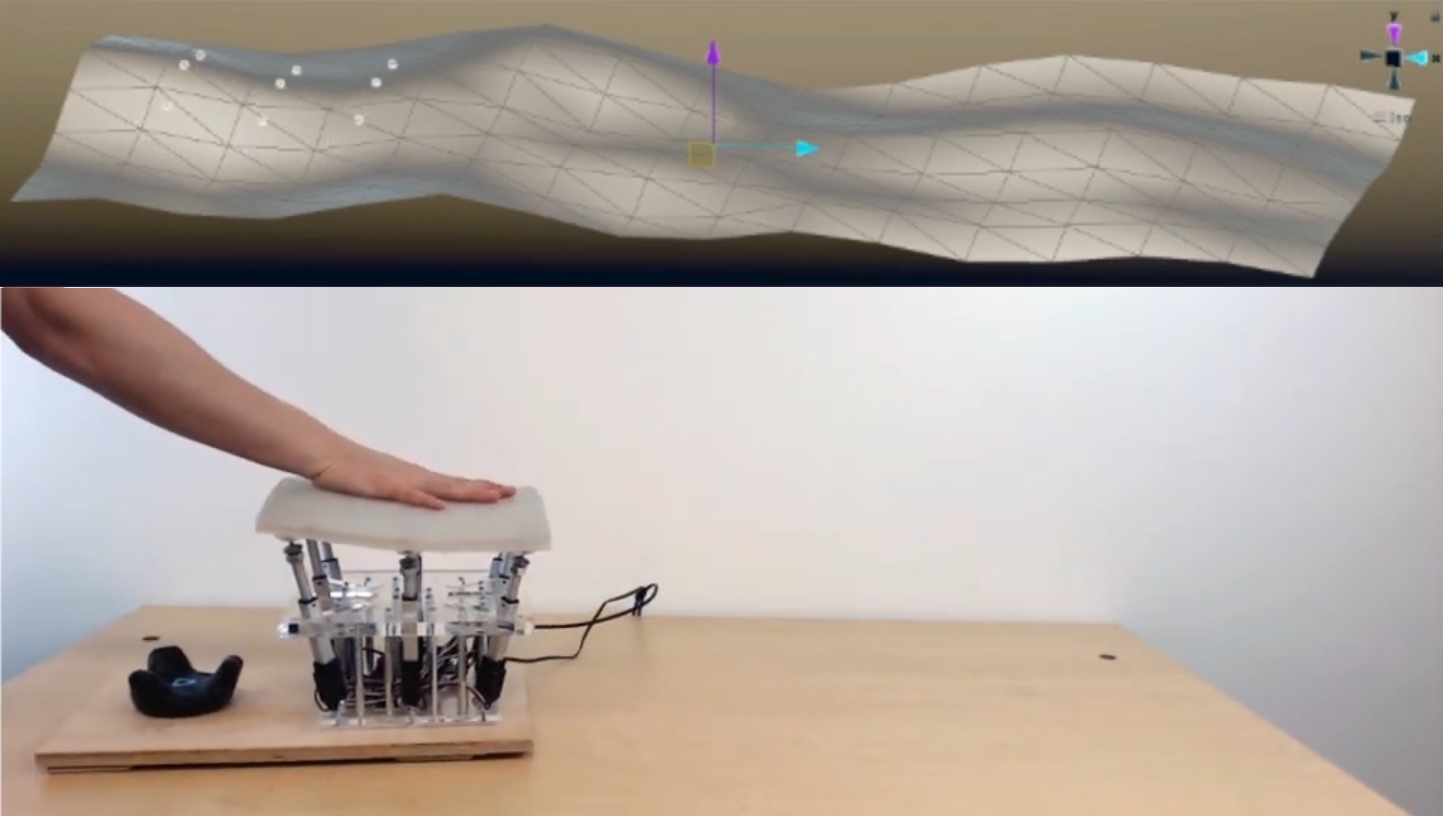

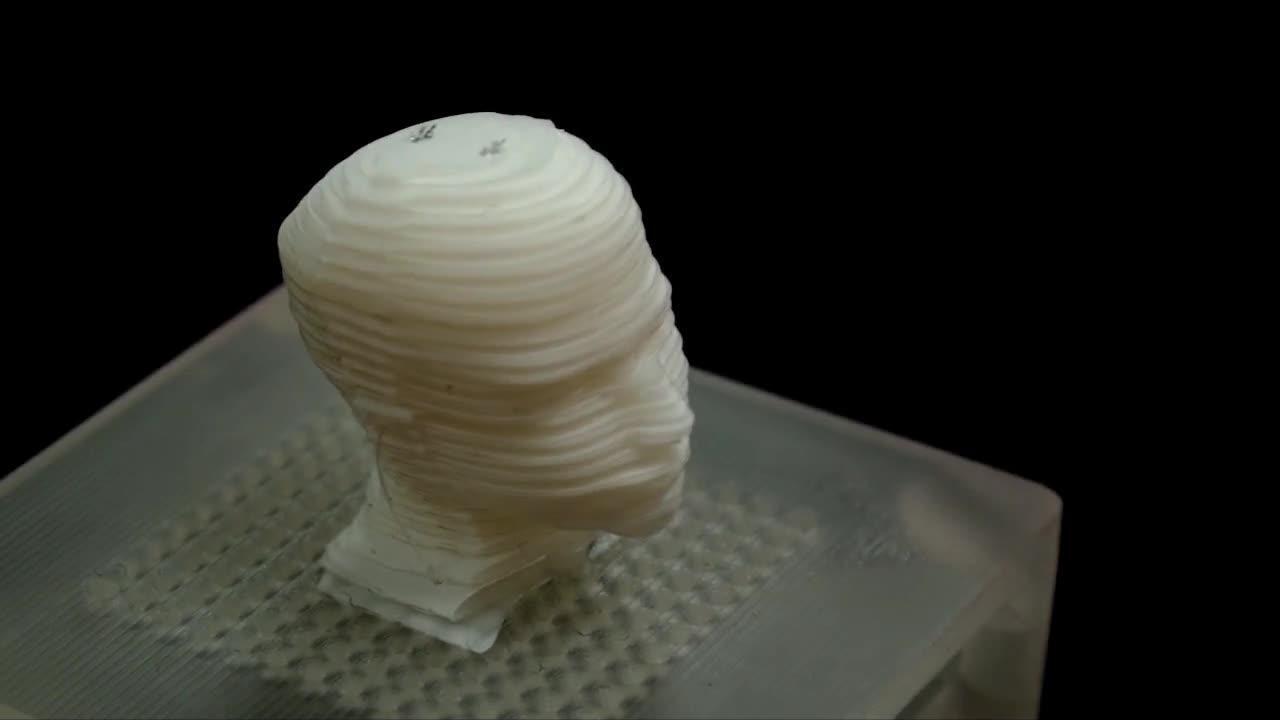

Architectures

To realize interfaces with physically reconfigurable geometry, researchers integrate combinations of sensors, hard or soft actuators, and smart materials into surfaces or volumes. These reconfigurable geometries can function as both input and output and are computationally controlled [1]. Various actuated architectures for SCIs have emerged in recent years as illustrated in Figure 8. Each of these architectures enables the exploration of one or more types of shape transformations. This diversity is necessary, as no single interface can realize all possible transformations, highlighting the current absence of fully programmable matter.

Research Agenda: Technology, User Behavior, Design, Ethics, Policy, and Sustainability

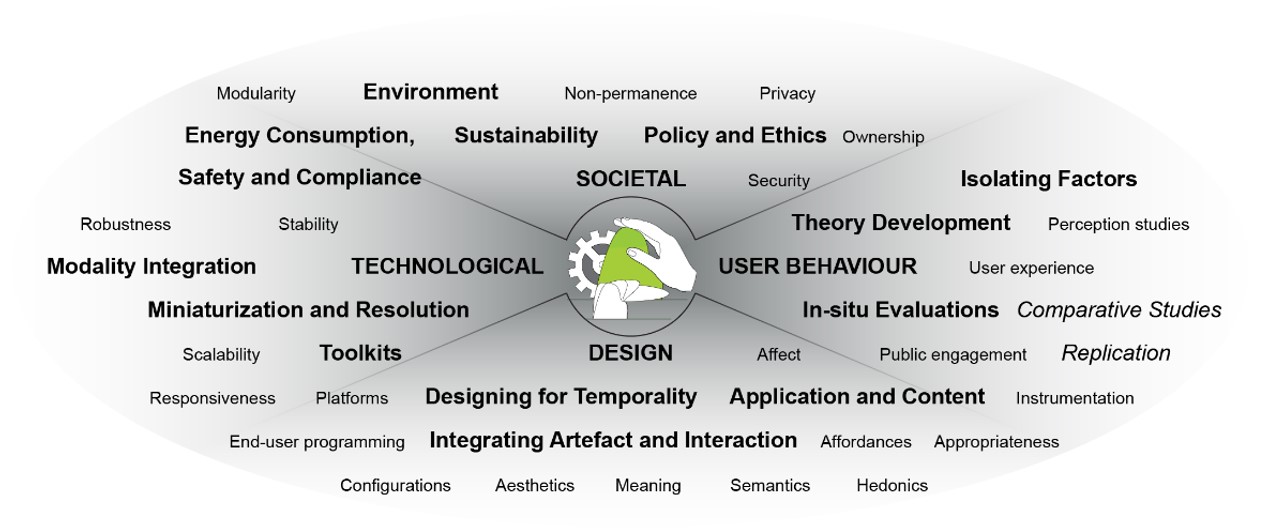

To advance SCIs, [1] identified twelve grand challenges across four domains: technology, user behavior, design, and policy, ethics, and sustainability. Figure 9 illustrates these grand challenges.

Technology

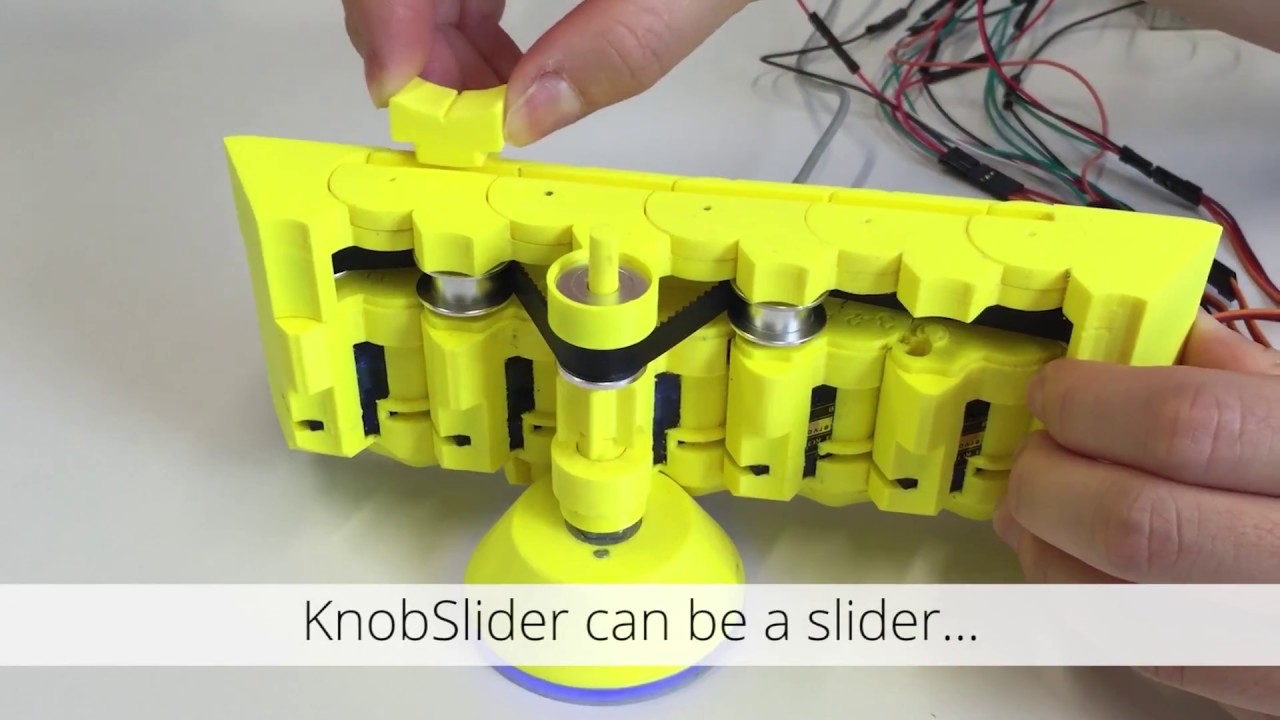

Toolkits for Prototyping of Shape-changing Hardware. Prototyping shape-changing interfaces currently requires knowledge of complex electronics and mechanical engineering, so there is a need for toolkits that facilitate it. This research aims to lower the implementation barrier by creating a standard platform for hardware prototyping, a cross-platform software layer, and tools for end-user programming, ultimately reducing the effort of classic interfaces by at least a factor of 10 in time and cost.

Miniaturized Device Form Factors and High Resolution. A significant challenge in shape-changing interfaces is the need for small, lightweight, and high-resolution actuators that can transition from stationary to mobile and wearable forms. Current electromechanical actuators often result in bulky setups, while user expectations demand high-resolution outputs. Addressing this challenge involves leveraging advancements in soft robotics and smart materials to create slimmer, more responsive actuation systems that can support a higher resolution of shape change.

Integration of Additional I/O Modalities. Today’s shape-changing interfaces need to integrate additional input and output modalities, such as high-resolution touch sensing and adjustable material properties, to realize their full potential. This can be achieved by incorporating off-the-shelf sensors and displays, as well as advances in flexible technologies that allow for conformal integration with actuators.

Non-functional Requirements. Energy consumption is a significant challenge in actuated systems, particularly in mobile or wearable solutions that must be self-contained and that often rely on large batteries or have short battery life. To address this, there is potential in system designs that reduce power consumption by offering (bi-)stable states, along with environmental energy harvesting and self-charging capabilities, to ensure usability for at least a full day without recharging.
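To make the full-day target concrete, the following is a back-of-the-envelope sketch of how (bi-)stable states could affect runtime. It is not taken from [1]: the model (a constant idle draw plus an actuation draw weighted by the fraction of time the actuators are powered) and every number in the usage lines are hypothetical and chosen only for illustration.

```python
def runtime_hours(battery_wh: float, idle_w: float,
                  actuation_w: float, duty_cycle: float) -> float:
    """Estimate runtime from a simple average-power model.

    battery_wh  -- usable battery energy in watt-hours (hypothetical)
    idle_w      -- power drawn at all times, e.g. sensing and control
    actuation_w -- extra power drawn while actuators are energized
    duty_cycle  -- fraction of time the actuators are energized (0..1)
    """
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    average_w = idle_w + actuation_w * duty_cycle
    if average_w <= 0.0:
        raise ValueError("average power must be positive")
    return battery_wh / average_w


# Illustrative numbers only: an actuator that must stay powered to hold
# a shape (duty cycle 1.0) versus a bistable one that is powered only
# while transitioning between shapes (duty cycle 0.05).
holding = runtime_hours(battery_wh=10.0, idle_w=0.1, actuation_w=2.0, duty_cycle=1.0)
bistable = runtime_hours(battery_wh=10.0, idle_w=0.1, actuation_w=2.0, duty_cycle=0.05)
```

Under these assumed values, only the bistable configuration would exceed 24 hours on a single charge; real devices would also need to account for transition energy, battery ageing, and any harvested energy.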

User Behavior

Understanding the User Experience of Shape-change. We should seek to understand the user experience (UX) of shape-change, as evaluating its effectiveness presents unique challenges. These include isolating the effects of combined modalities and assessing the robustness of current systems for in-depth evaluations. By characterizing the value of shape-changing interfaces, we can identify beneficial domains and tasks, supporting their design and construction. This involves conducting comparative studies and isolating factors to deepen our understanding of user interactions with shape-change.

Shape-Change Theory Building. Behavioural sciences construct theories to integrate empirical results and make predictions, yet shape-change research lacks such theorizing. Developing theoretical statements that articulate how shape-change affects interaction could help predict user reactions and enhance our understanding of its usefulness.

Design

Designing for Temporality. Shape-changing interfaces require temporal design, presenting challenges in translating behavioral sketches into actual designs due to the unique experiences users have with dynamic forms. While traditional design methods struggle to articulate these properties, inspiration from disciplines like dance and music can help develop techniques for designing and comparing temporal forms.

Integrating Artefact and Interaction. Designers of shape-changing interfaces must create devices that harmonize usability and aesthetics, engaging both the body and mind. This requires an understanding of theory, heuristics, and dynamic affordances, alongside the development of tools and methods that integrate artefact and interaction in the design process.

Application and Content Design. The design and implementation of applications and content for shape-changing interfaces present significant challenges, which can be categorized into four parts: when to apply shape-change, what shape-changes to implement, what applications to build, and how to design the content for those applications. It is crucial to develop frameworks and design principles that clarify the appropriate contexts for shape-change and establish consensus on shape-change semantics to avoid user confusion. Additionally, careful consideration of end-user content is essential, particularly for interfaces that utilize dynamic displays, necessitating the development of toolkits for content design across various formats.

Policy, Ethics, and Sustainability

Policy and Ethics. Policy-makers face the challenge of creating legislation that ensures the safe and ethical operation of shape-changing interfaces without stifling innovation. Key issues include safety, security, content appropriateness, ownership responsibility, and the implications of non-permanency in transformations.

Sustainability and the Environment. Shape-changing interfaces pose sustainability challenges due to their demand for natural resources and recycling difficulties, but their morphing ability could reduce the need for multiple devices, ultimately lowering resource requirements. Researchers should focus on developing long-lasting, modular interfaces that support upgrades and interoperability to enhance sustainability.

My Main Contributions

So far, I have contributed three research projects in the field of Shape-Changing Interfaces that address some of these grand challenges.

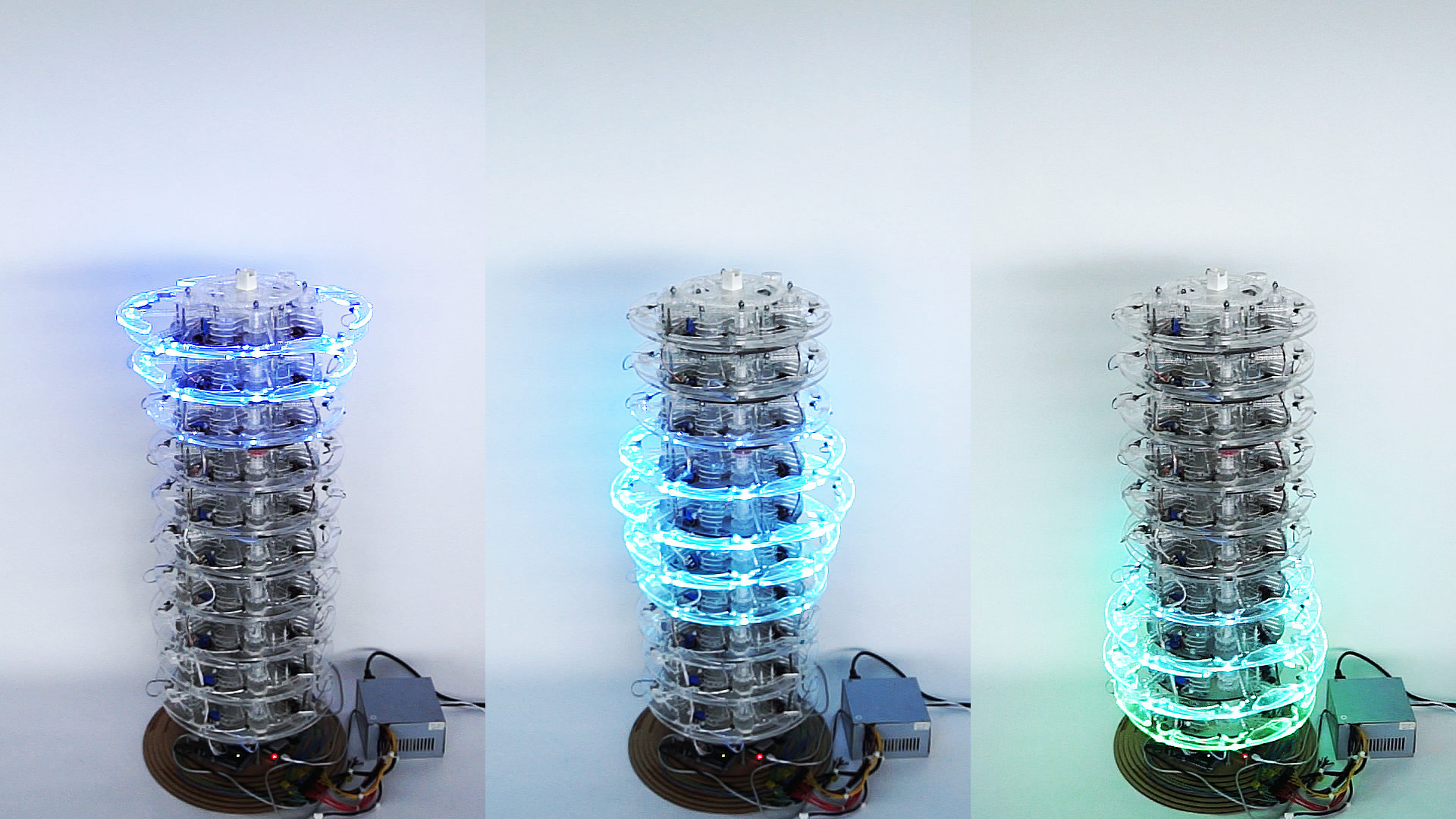

Expandable Illuminated Ring (2018)

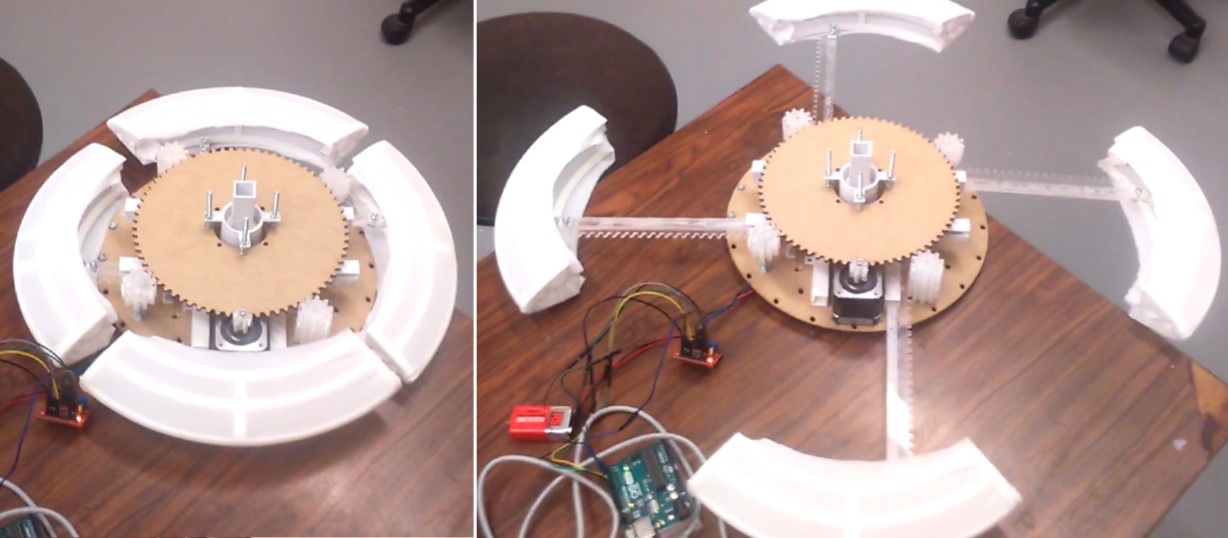

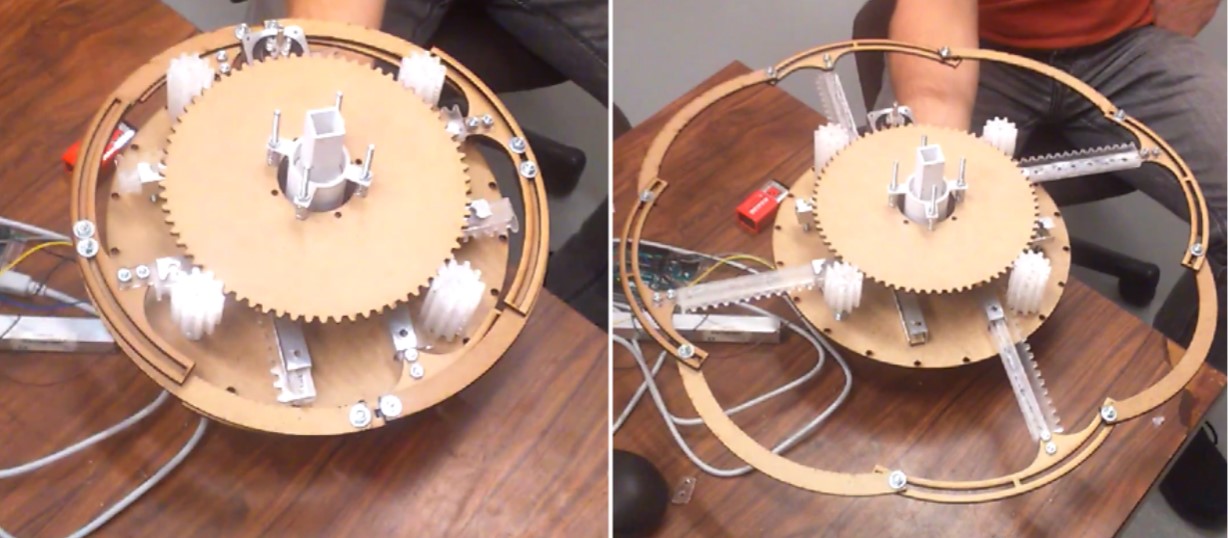

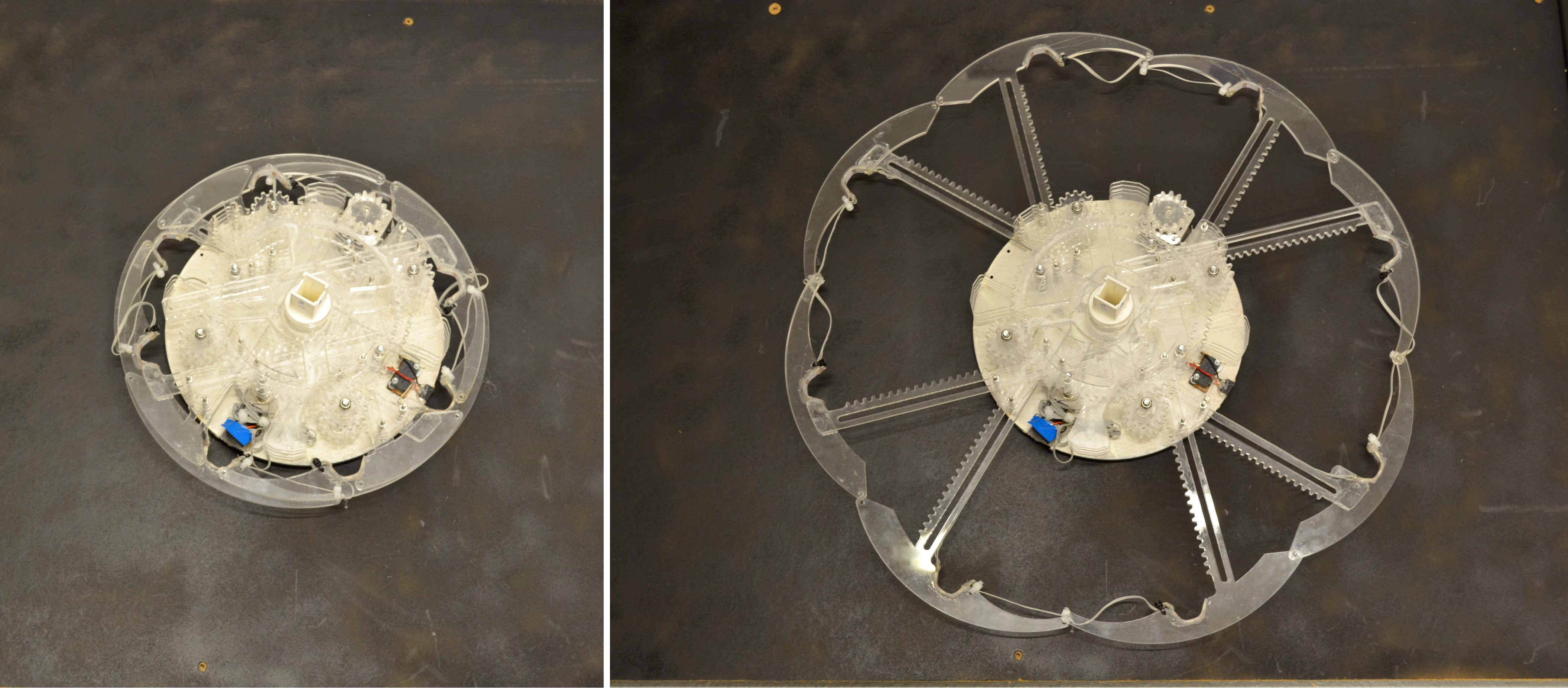

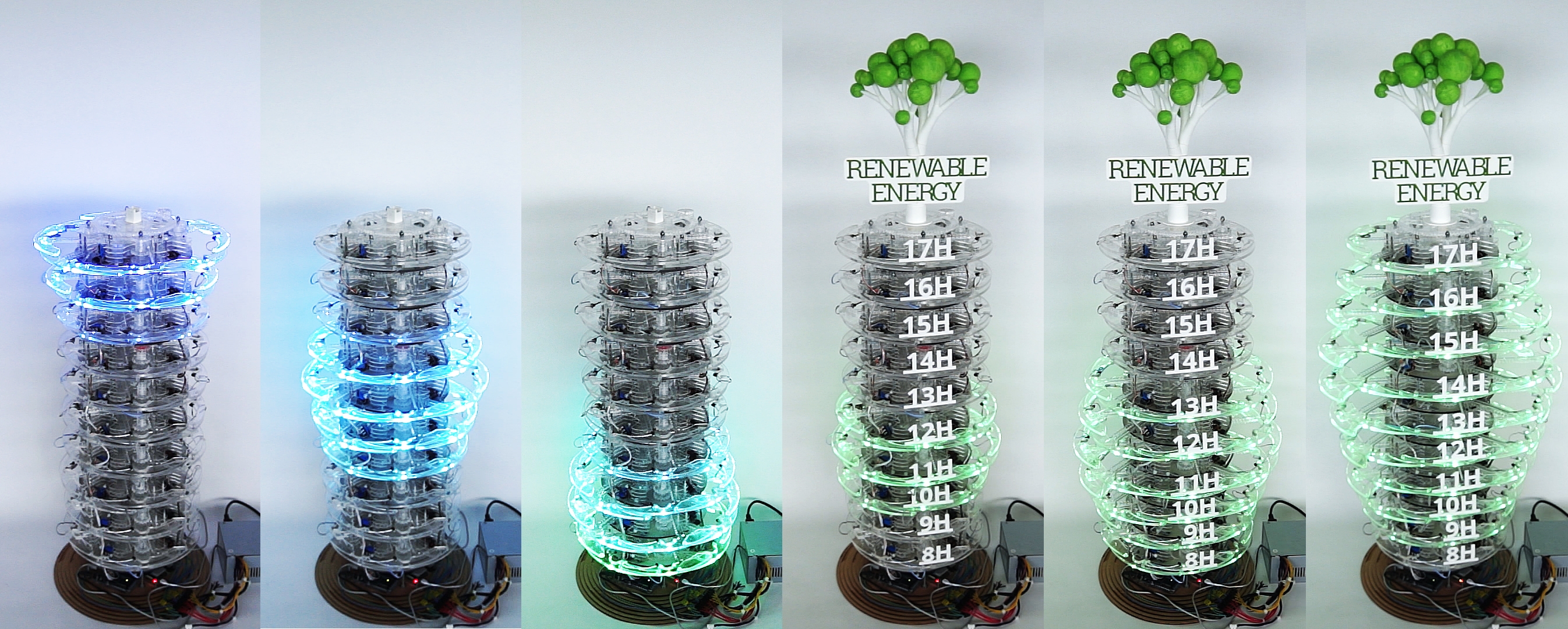

In [6], I presented the iterative design and implementation of an expandable and stackable illuminated ring (Figure 10), the building block of ring-based shape displays. Ring-based shape displays are a type of shape-changing interface in which the deformation or movement of the display is achieved through a system of rings that can be mechanically actuated to create different shapes, surfaces, or structures in a controlled manner.

This project contributed to the grand challenges of Technology (toolkits for prototyping of shape-changing hardware, miniaturized device form factors and high resolution).

CairnFORM (2019)

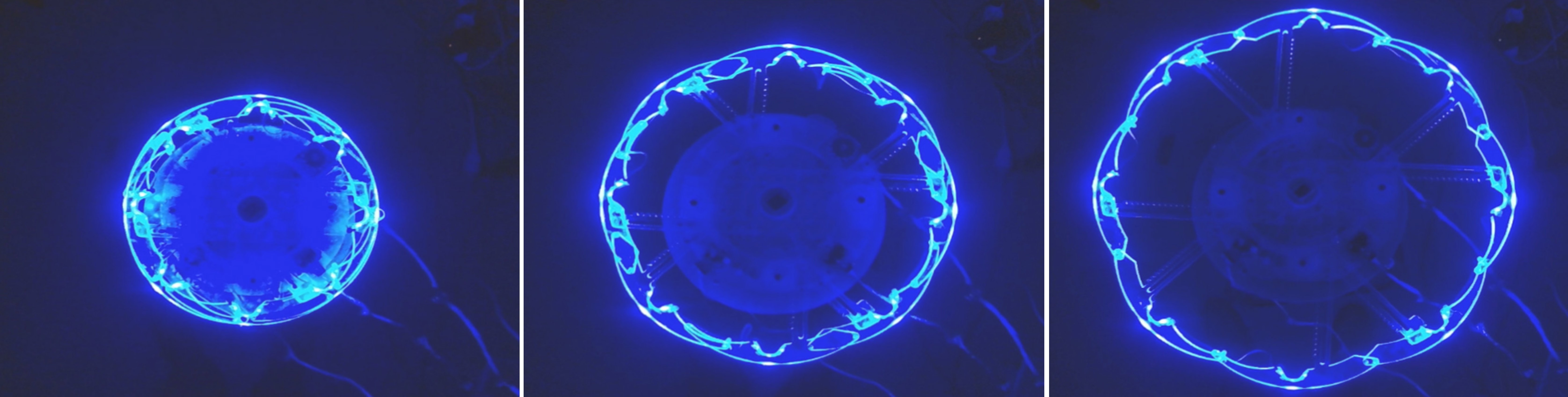

In [5], we (1) introduced CairnFORM, a stack of expandable illuminated rings that forms a display able to change its axisymmetric shape (e.g., cone, double cone, cylinder). We showed that axisymmetric shape-change can be used (2) to inform users around the display through data physicalization and (3) to unobtrusively notify users around the display through peripheral interaction.
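As an illustration of how a stack of expandable rings can approximate an axisymmetric shape, the sketch below computes one target diameter per ring. It is not the CairnFORM implementation from [5]: the function name, the linear profiles, the reading of "double cone" as two cones joined at their bases, and the diameter values are all assumptions made for this example.

```python
def ring_diameters(shape: str, n_rings: int,
                   d_min: float, d_max: float) -> list[float]:
    """Return target diameters from the bottom ring to the top ring.

    shape  -- "cylinder", "cone", or "double_cone" (hypothetical names)
    d_min  -- diameter of a fully contracted ring (assumed value)
    d_max  -- diameter of a fully expanded ring (assumed value)
    """
    if n_rings < 2:
        raise ValueError("need at least two rings")
    if shape not in ("cylinder", "cone", "double_cone"):
        raise ValueError(f"unknown shape: {shape}")

    diameters = []
    for i in range(n_rings):
        t = i / (n_rings - 1)  # 0.0 at the bottom ring, 1.0 at the top
        if shape == "cylinder":
            k = 1.0                        # every ring fully expanded
        elif shape == "cone":
            k = 1.0 - t                    # widest at the base
        else:
            k = 1.0 - abs(2.0 * t - 1.0)   # widest at mid-height
        diameters.append(d_min + k * (d_max - d_min))
    return diameters


# Five rings, with assumed diameters of 10 (contracted) to 30 (expanded).
cone = ring_diameters("cone", 5, 10.0, 30.0)
double_cone = ring_diameters("double_cone", 5, 10.0, 30.0)
```

Because each ring only needs a single scalar (its diameter), any axisymmetric profile reduces to one value per ring, which is what makes a ring stack a compact way to render such shapes.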

This project contributed to the grand challenges of Technology (miniaturized device form factors and high resolution) and Design (integrating artefact and interaction, application and content design).

CairnFORM² (2021)

In [8], we extended the understanding of the potential utility and usability of axisymmetric shape-change for display. We presented (1) 16 new use cases for CairnFORM (Figure 12 (a)) and (2) a two-month comparative field study with CairnFORM in the workplace (Figure 12 (b)). Early results show that, compared with flat-screen animations, cylindrical shape-change animations retain their attractiveness better over time.

This project contributed to the grand challenges of User Behavior (understanding the user experience of shape-change) and Design (application and content design).

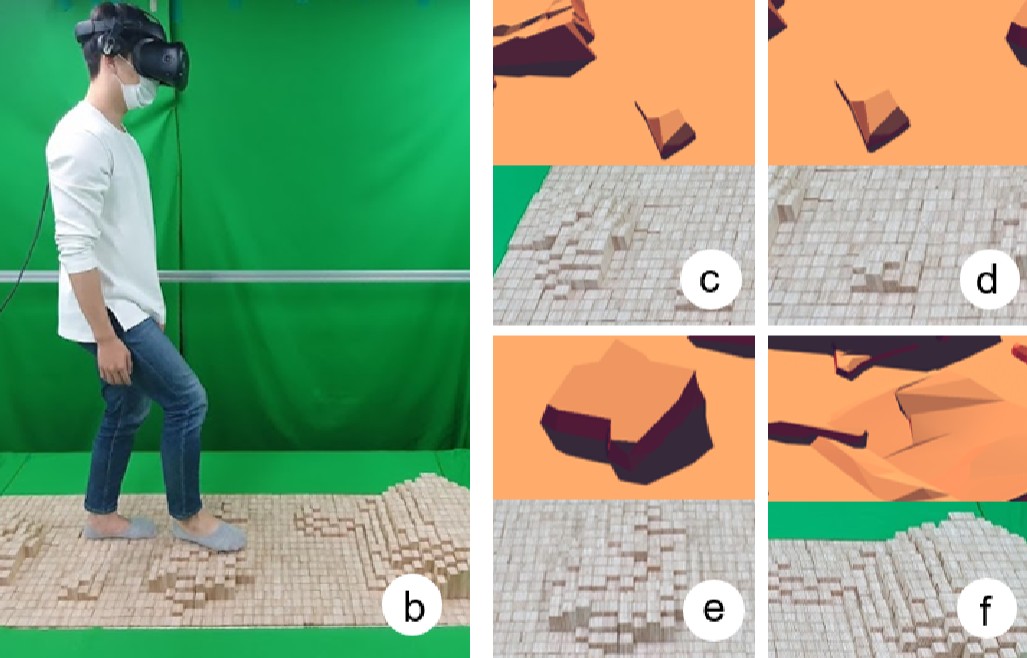

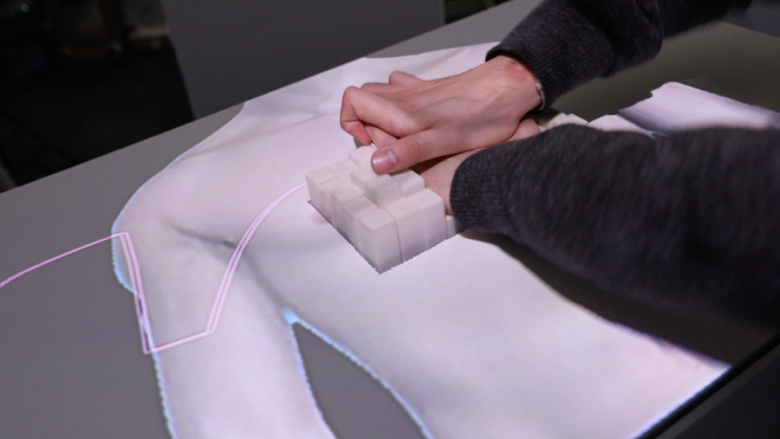

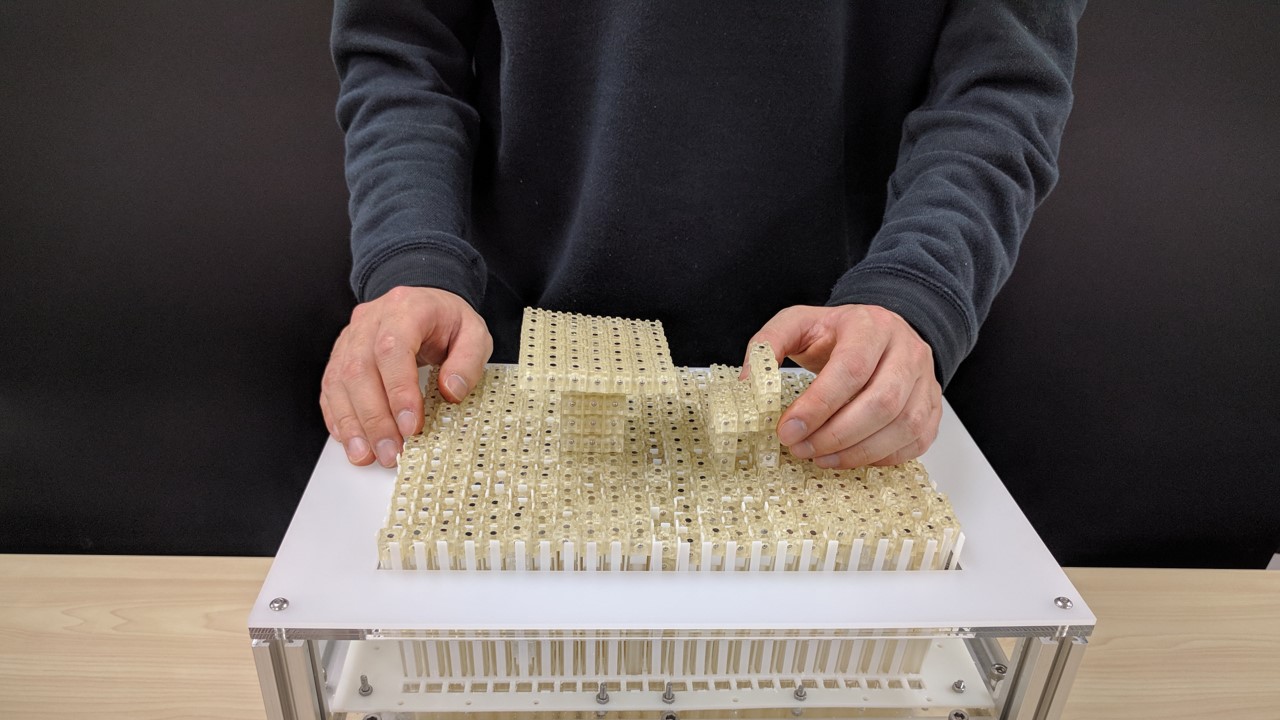

ShyPins (2025)

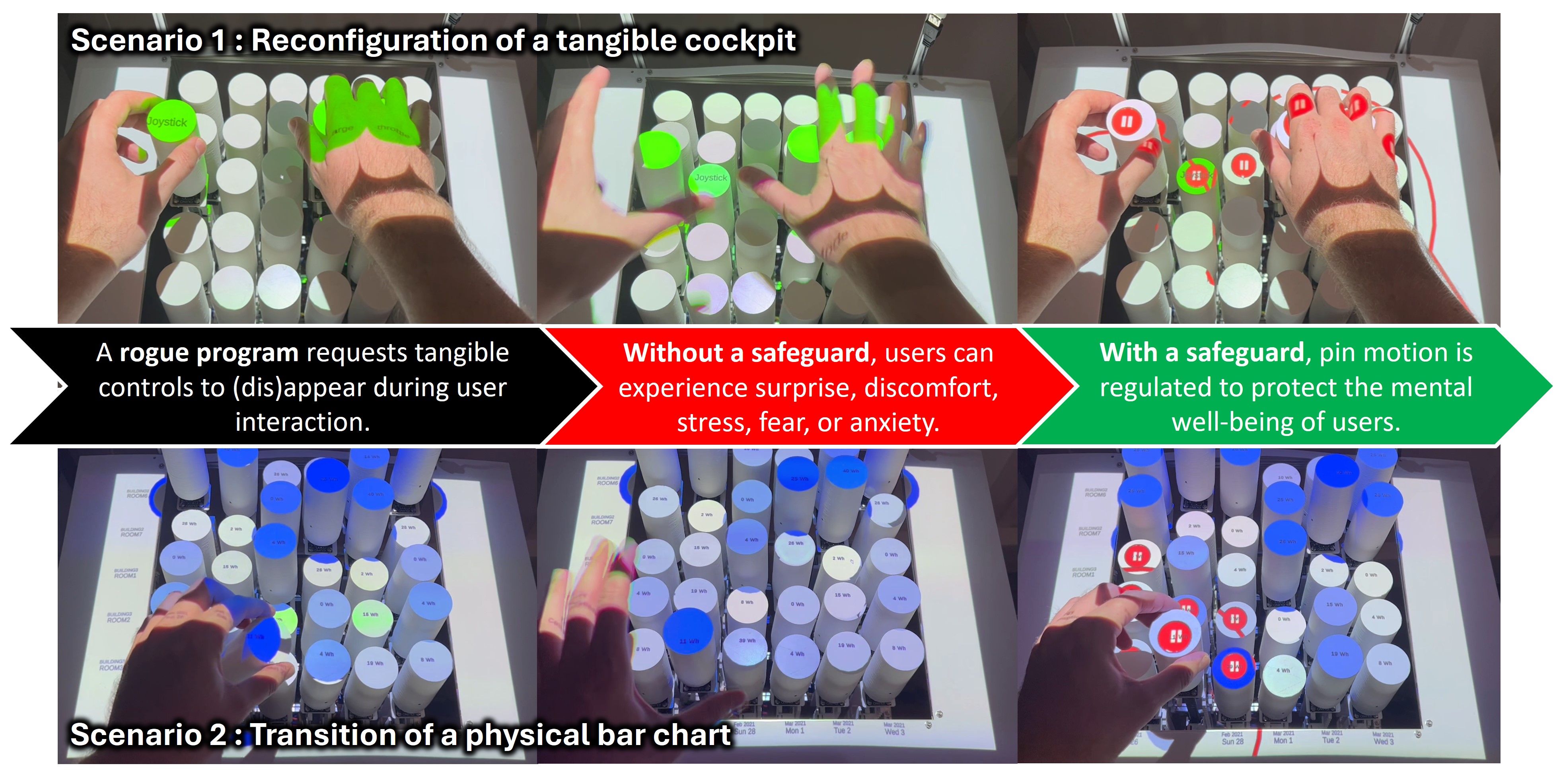

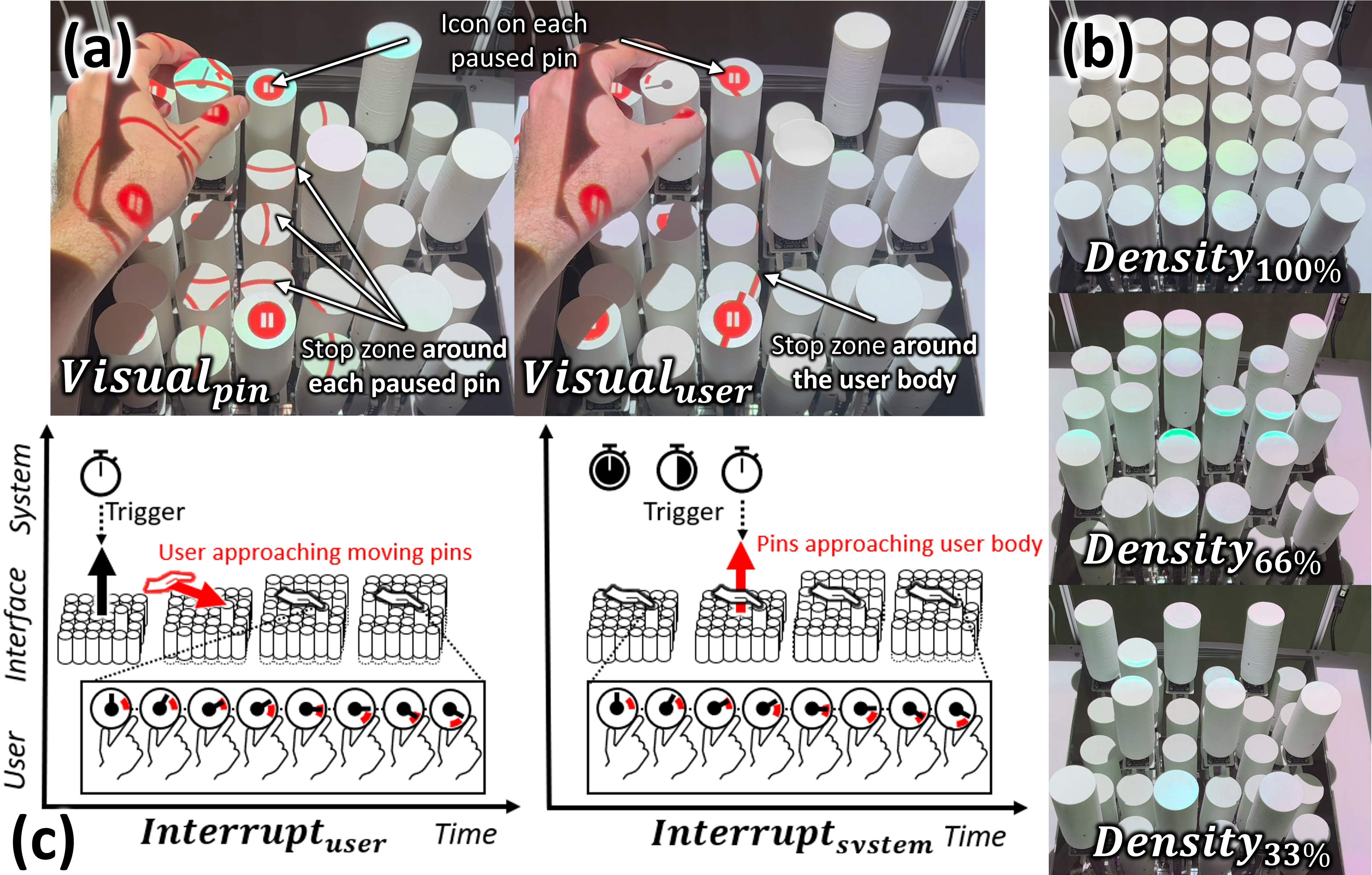

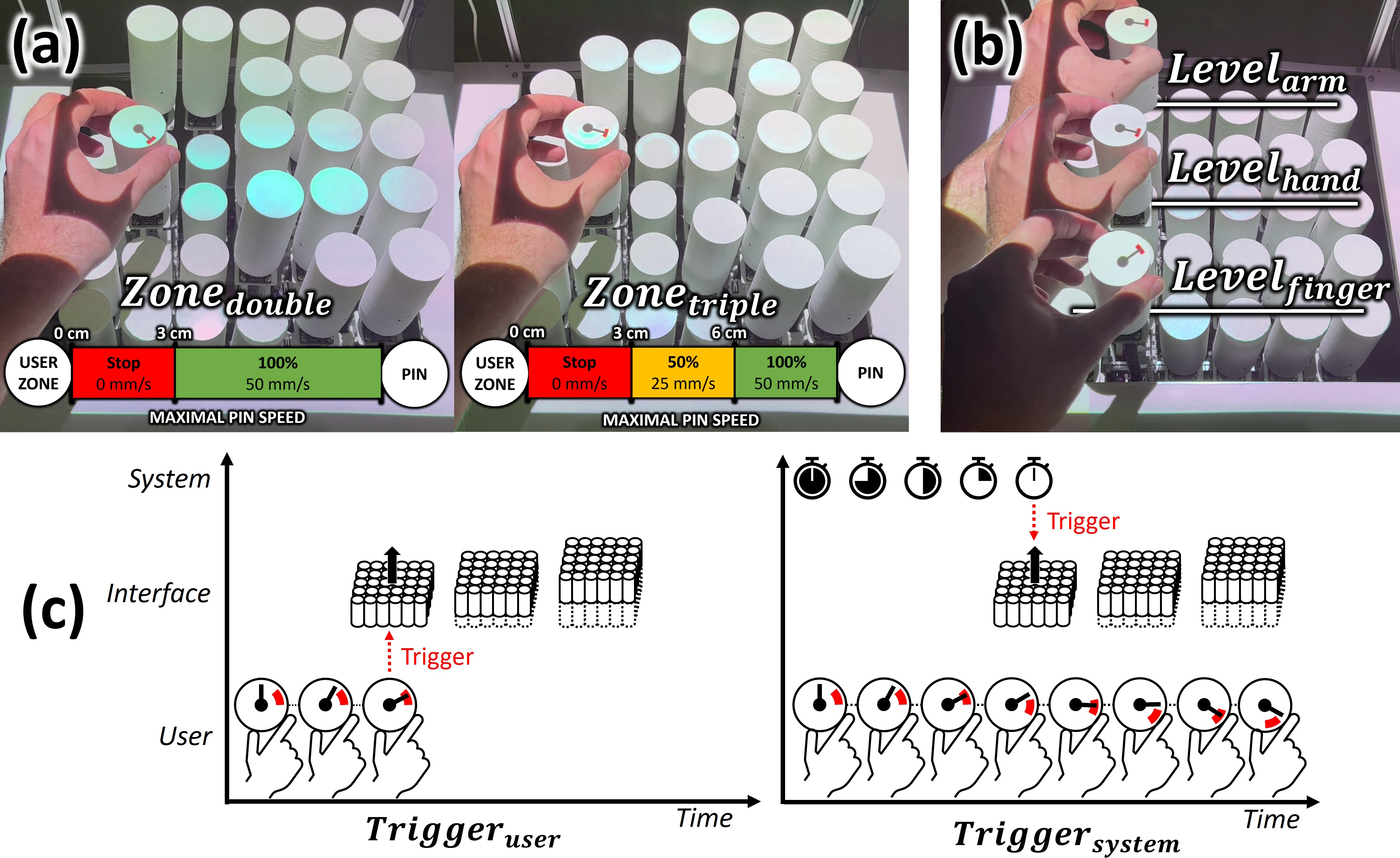

In [7], we introduced ShyPins, a pin-based shape display that implements a safety strategy to protect users’ physical and psychological well-being by regulating pin motion based on user proximity (Figure 13). Pin-based shape displays can cause discomfort or psychological harm when pins move forcefully against the user’s body; ShyPins addresses this by modulating actuation behavior as users approach. A user study showed that gradually reducing pin speed as users move closer is perceived as safer than abruptly stopping motion, while the perceived safety of motion pauses depends on user preferences in user-triggered actuation. Another study found that projecting user-centered stop zones improves perceived safety compared to pin-centered zones, and that users feel less safe when approaching moving pins than when pins move toward them.
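The two strategies compared in the first study, gradual slowdown and abrupt stop, can be sketched as functions that scale a pin's nominal speed by the user's distance. This is a minimal illustration and not the ShyPins controller from [7]: the linear ramp, the function names, and the distance thresholds are assumptions.

```python
def gradual_speed_scale(distance_m: float,
                        stop_at_m: float = 0.10,
                        slow_from_m: float = 0.50) -> float:
    """Scale factor in [0, 1] that ramps pin speed down as the user approaches.

    Full speed beyond slow_from_m, zero speed within stop_at_m, and a
    linear ramp in between. Both thresholds are hypothetical values.
    """
    if slow_from_m <= stop_at_m:
        raise ValueError("slow_from_m must be greater than stop_at_m")
    if distance_m <= stop_at_m:
        return 0.0
    if distance_m >= slow_from_m:
        return 1.0
    return (distance_m - stop_at_m) / (slow_from_m - stop_at_m)


def abrupt_speed_scale(distance_m: float, stop_at_m: float = 0.10) -> float:
    """Scale factor that keeps full speed until the stop threshold."""
    return 0.0 if distance_m <= stop_at_m else 1.0


# A pin whose nominal speed is 0.2 m/s (assumed), with a user 0.3 m away.
nominal_speed = 0.2
gradual = nominal_speed * gradual_speed_scale(0.30)
abrupt = nominal_speed * abrupt_speed_scale(0.30)
```

With these assumed thresholds, the gradual strategy has already halved the pin speed at 0.3 m, whereas the abrupt strategy still runs at full speed until the user crosses the stop threshold.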

This project contributed to the grand challenges of Technology (integration of additional I/O modalities); Policy, Ethics, and Sustainability (policy and ethics); and User Behavior (understanding the user experience of shape-change).